This was initially planned as a pocket book, but I took a detour because the heavy printing and distribution expenses could not be met. Putting it up as a blog post also lets me update it as and when needed. I will try to stick to chronological order as far as possible, but if I deviate from the actual sequence of events anywhere, please point it out by posting on platform X and tagging jijutm, or feel free to leave a comment on this post.

By way of preface, this is the story of my transformation from a modest DTP operator in 1987 to an AWS Solution Architect in 2020. As anyone can imagine, I went through many hazards and over many speed breakers along the way.

This is not a story of overnight success or a linear path to achievement. It’s a story of continuous learning, adaptation, and a relentless pursuit of solutions. From my early days tinkering with technology to leading complex cloud migrations and developing serverless architectures for major organizations, my journey has been filled with unexpected turns, challenges, and opportunities. This book is a reflection on those experiences—the triumphs, the setbacks, and the lessons learned along the way. It’s a testament to the power of resourcefulness, the importance of community, and the ever-evolving landscape of technology. Whether you’re a seasoned technologist, just starting your career, or simply curious about the world of software and cloud computing, I hope this story inspires you to embrace change, find creative solutions, and never stop learning.

Early Days and First Encounters with Technology

In 1987, just out of college (Sreenarayana College, Chempazhanthy), I started loitering around Pixel Graphics, a multi-business center run by a few friends near Medical College Junction. They catered to the thesis reports of medical students and the like, offering word processing, large-font titles for separation pages, plastic spring binding, photocopying and a long-distance telephone booth. This is where I got my first exposure to production systems, with software like Gem First Publisher, Lotus 1-2-3 and WordStar, and printing on an 8-pin dot matrix printer. In no time I learned the intricacies of word processing in WordStar and of tweaking page layouts using WordStar dot commands. Later, for better quality output, I borrowed an electronic typewriter from another establishment run by a couple of friends, twin brothers, interfaced it to our computer and started printing to it from WordStar.

It was during this time that I got interested in computer hardware and enrolled in a certificate course at Universal Institute of Technologies near the Press Club, Trivandrum. There, Shaji Sir played a pivotal role in shaping my dreams, and I completed the hardware maintenance and assembly course in the stipulated time. Following this, the institution offered me the post of hardware engineer on contract.

This continued until I decided to split off and start a separate unit in another part of the city, where I had my desktop PC, a scanner and a laser printer (a very modest one even at that time, the HP LaserJet 4L with a maximum of 300 dpi), and I updated my skills to PageMaker and CorelDRAW. The renowned engineering textbook author Dr C. E. Justo, after getting a few samples done by me, selected me to do the drawings for the updated and revised edition of his Highway Engineering. The samples were actually a couple of machine parts and a few graphs. There was no data, only a photocopy of the older edition with some corrections. The machine parts were drawn manually using vector components and functions in CorelDRAW. For the graphs, I cooked up arbitrary values by checking against the supplied drawing, created the graphs in Excel, and exported them as images to Adobe Photoshop, where the resolution was increased manually.

Dubai and the Implementation Project

My first significant professional experience came when I took on an implementation contract with Al-fajr Print Media in Dubai. My task was to automate their business directory production process. This involved working with existing software and hardware to create a more efficient workflow. I successfully implemented a crucial automation system, solved numerous technical problems, and even earned a reputation as a reliable and knowledgeable technologist in the local community.

To explain a bit: in their process, the same data was entered into a billing system in Microsoft Access on Windows and again into Excel on the Mac for sorting, and was then copied into a QuarkXPress layout for printing. The automation I implemented used a Microsoft Access VBA script to export the data as QuarkXPress layout scripts, which could be run directly from the QuarkXPress Script Basket on the Mac so that the layout happened automatically.

By 1995, my implementation contract with Alfajr Print Media in Dubai had come to an end, and I returned to my hometown. My time there had been invaluable, giving me practical experience in implementing real-world solutions. However, I realized that formalizing my skills with a recognized certification would significantly enhance my career prospects, so I decided to pursue the Microsoft Certified Systems Engineer (MCSE) certification. The program involved rigorous study and a series of challenging exams covering topics like Windows NT Server, networking protocols, and system administration. My experience in Dubai, particularly my work with Windows systems and networking at Alfajr Print Media, proved to be a valuable foundation for my MCSE studies. The hard work and late nights paid off in 1997. I vividly remember the moment I received confirmation that I had passed all the required exams and officially earned my MCSE certification. It was a tremendous feeling of accomplishment. During this time, I was an avid reader of PCQuest, one of the most popular computer magazines in India. I particularly enjoyed the articles by Atul Chitnis.

Transition to Linux

In December of 1997, inspired by his insightful articles, I decided to take a leap of faith and travel to Bangalore to meet him. Resources were limited, so I ended up hitching rides for a significant portion of the journey. Over three days, I managed to get free lifts in eight different trucks. Finally, I arrived in Bangalore and managed to connect with Mr. Chitnis. Meeting him was a truly inspiring experience. As I was preparing to leave, he handed me a couple of floppy disks. ‘Try this out,’ he said, with a slightly mischievous glint in his eye. He then added a word of caution: ‘This is an operating system. If you’re not careful, you could easily screw up your existing operating system installation, so proceed with caution.’ The return journey to Trivandrum was a stark contrast to the arduous hitchhiking trip to Bangalore. Thanks to Mr. Chitnis and his local connections, I was able to secure a direct ride in a truck heading towards my hometown.

Back home in Trivandrum, I was eager to explore the contents of the floppies. Remembering his warning about the potential to damage my existing Windows installation, I decided to take a precautionary step. I swapped the hard disk in my system—the same one I had brought back from Dubai—for a new, blank drive. With the new hard disk in place, I inserted the first floppy and booted up my computer. What followed was my first encounter with Linux. The floppies contained Slackware Linux 3.3, a distribution that had been released in October of that year. My initial forays into Linux with Slackware quickly evolved into a deeper engagement with the open-source community.

I became actively involved with ILUG (India Linux Users Group), a vibrant community of Linux enthusiasts across India. I even had the opportunity to give a few talks at in-person events in Trivandrum, sharing my knowledge of Linux system administration and networking. After Slackware, I transitioned to Red Hat Linux, and then, in early 2004, I started using Fedora.

In 1998, Logtech Systems ran an internet surfing center at Vazhuthacaud, with a high-speed internet connection shared through Windows using a one-day trial of Spoon Proxy. They had to reinstall the system every day to extend the trial of the proxy software. I suggested shifting the whole system to Linux with Squid and a SOCKS proxy and took the initiative to do it. The migration was completed in a few hours, and the whole team was satisfied with the transition.

Building My Own Business

In the early 2000s, two of my close friends and I decided to take the plunge and start our own software company. Our first major client came to us with a request to develop custom software for a binary multi-level marketing system. My friend, who was our Java expert, raised a valid concern: MySQL 3.20, the version we were initially planning to use, lacked transaction support. After some digging online, I discovered that a newer, unreleased version of MySQL, version 3.23, had the potential for transaction support. The catch? It was only available as source code. I had some experience compiling software from source, so I took on the challenge. After a few late nights and some careful configuration, I successfully compiled the MySQL 3.23 release candidate. We then rigorously tested the transaction functionality directly from the command-line interface to make sure it worked as expected. After careful consideration and weighing the risks, my friend and I decided to go ahead and use this release candidate on the production servers for our client’s project.

By 2005, our company had come to be recognized for its expertise in MLM software development, largely due to a unique tool I had developed: a plan evaluation simulator. The simulator took an MLM plan as a configuration array, essentially a structured set of data defining the plan’s rules and structure. From this configuration, it calculated the breakout period and populated a database table with numerical node names representing the full network structure. This simulator was a game-changer for our clients.
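The idea of driving a simulation from a configuration array and naming nodes numerically can be sketched in a few lines of PHP. This is a minimal illustration under stated assumptions, not the original simulator: the config keys (`width`, `recruits_per_period`, `target_depth`) are hypothetical, and "breakout period" is taken here to mean the number of periods until every slot down to the target depth is filled at a constant recruitment rate.

```php
<?php
// Minimal sketch of a binary MLM plan simulator. The config keys and the
// breakout rule (periods until the tree first fills every slot down to
// target_depth) are hypothetical illustrations, not the original tool's format.

$plan = [
    'width'               => 2,  // binary plan: each member has two legs
    'recruits_per_period' => 10, // new members joining per period (assumed constant)
    'target_depth'        => 5,  // levels below the root that must fill up
];

/** Total nodes in a full tree of the given width, levels 0..$depth (root is level 0). */
function fullTreeSize(int $width, int $depth): int
{
    $total = 0;
    $levelSize = 1;
    for ($level = 0; $level <= $depth; $level++) {
        $total += $levelSize;
        $levelSize *= $width;
    }
    return $total;
}

/** Periods needed to fill the tree, assuming the root already exists at period 0. */
function breakoutPeriod(array $plan): int
{
    $needed = fullTreeSize($plan['width'], $plan['target_depth']) - 1;
    // Integer ceiling division: a partially filled period still counts.
    return intdiv($needed + $plan['recruits_per_period'] - 1, $plan['recruits_per_period']);
}

/**
 * Numeric node names: with nodes numbered 1..N in breadth-first order, the
 * parent of node n is intdiv(n - 2, width) + 1, so the whole network can be
 * stored as (node, parent) rows without any other identifiers.
 */
function nodeRows(array $plan): array
{
    $rows = [];
    $count = fullTreeSize($plan['width'], $plan['target_depth']);
    for ($n = 1; $n <= $count; $n++) {
        $rows[] = ['node' => $n, 'parent' => $n === 1 ? null : intdiv($n - 2, $plan['width']) + 1];
    }
    return $rows;
}

echo breakoutPeriod($plan), PHP_EOL;  // periods until the tree is full
echo count(nodeRows($plan)), PHP_EOL; // rows to insert into the node table
```

With these sample values the full tree has 63 nodes, so 62 recruits at 10 per period give a breakout period of 7. The breadth-first numbering is one convenient choice because parent links become pure arithmetic.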

As our company continued to grow, we realized the importance of clearly defining our roles and responsibilities. One of my partners, who had a remarkable ability to connect with clients and a strong understanding of financial matters, took on the dual role of CEO and Finance Manager. Our Java programmer friend naturally transitioned into the role of Project Manager. With my extensive software experience, multiple certifications including MCSE and RHCE, and deep understanding of hardware, it was a natural fit for me to take on the role of CTO. Our success with MLM projects allowed us to expand significantly. We outgrew our initial setup and moved into a proper office space near the Thiruvananthapuram Medical College.

Integration of Church Directory

In 2002, I was approached by organizers from a nearby church, the Immanuel Mar Thoma Church, Paruthippara, who needed help creating an interactive CD-based directory of their members. They wanted to include details about each family and individual, along with photographs. I suggested using Microsoft Excel for the textual data and a structured folder system for the photos, using the edavaka (parish) register number and serial numbers to link the data. The interactive CD was created using Macromedia Flash, with an individual SWF file for each family and a single loader interface. With 3,500+ members across 800 families in the church, this could have been a herculean task. But drawing on my early DevOps instinct, I used a VBA SendKeys macro in Microsoft Excel to drive Macromedia Flash, so the layout was done entirely by my system while I sat back and watched the activity on screen.
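Linking spreadsheet rows to photos through the register number and serial number can be illustrated with a short PHP sketch. It assumes the sheet is exported to CSV with hypothetical columns `register_no`, `serial_no` and `name`, and that photos live at a hypothetical path `photos/<register_no>/<serial_no>.jpg`; neither detail comes from the original project.

```php
<?php
// Minimal sketch, assuming a CSV export with the hypothetical columns
// register_no, serial_no, name, and photos stored as
// photos/<register_no>/<serial_no>.jpg. Neither the column names nor the
// folder layout come from the original project.

/** Parse CSV text into associative rows keyed by the header line. */
function parseMembers(string $csv): array
{
    $lines = array_filter(explode("\n", trim($csv)), 'strlen');
    $header = str_getcsv(array_shift($lines), ',', '"', '\\');
    $rows = [];
    foreach ($lines as $line) {
        $rows[] = array_combine($header, str_getcsv($line, ',', '"', '\\'));
    }
    return $rows;
}

/** Group members into families by register number and attach photo paths. */
function groupFamilies(array $rows, string $photoRoot = 'photos'): array
{
    $families = [];
    foreach ($rows as $row) {
        $reg = $row['register_no'];
        $families[$reg][] = [
            'serial' => (int) $row['serial_no'],
            'name'   => $row['name'],
            'photo'  => sprintf('%s/%s/%s.jpg', $photoRoot, $reg, $row['serial_no']),
        ];
    }
    // Members in serial order within each family, families in register order.
    foreach ($families as &$members) {
        usort($members, fn($a, $b) => $a['serial'] <=> $b['serial']);
    }
    unset($members);
    ksort($families);
    return $families;
}

$csv = <<<CSV
register_no,serial_no,name
102,2,Mary
101,1,Thomas
102,1,George
CSV;

$families = groupFamilies(parseMembers($csv));
print_r(array_keys($families));
echo $families[102][0]['photo'], PHP_EOL;
```

Because the register and serial numbers are the only join keys, the photo folders and the spreadsheet can be maintained independently, which is what allowed the same data to be reused years later for print.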

Five years later, I received another call from the same parish. They were now looking to create a printed version of their member directory. They had diligently maintained the data in the Excel spreadsheet using the structure we had established for the CD project. By this time, I had become quite proficient in PHP programming and had started using the FPDF library extensively for PDF generation. I was also experimenting with GNU Make for basic task orchestration. This combination of tools provided the perfect solution. I created a series of PHP and shell scripts, each responsible for a specific part of the process, and then used GNU Make to orchestrate their execution. The commands were: make import, make layout, make pdf, make index, and make binding. This workflow, orchestrated by GNU Make, allowed me to automate the entire print publication process. The approach has proven so effective that it is still being used today. The church revises the directory every five years, and I have continued to be involved in the process. Recently, recognizing the importance of preserving this knowledge and making it easier for others to learn the process, I created a video demo using OBS Studio and the OpenShot video editor; the final video is hosted on YouTube at http://bz2.in/82jbxu.

Scaling and Optimizing for Growth

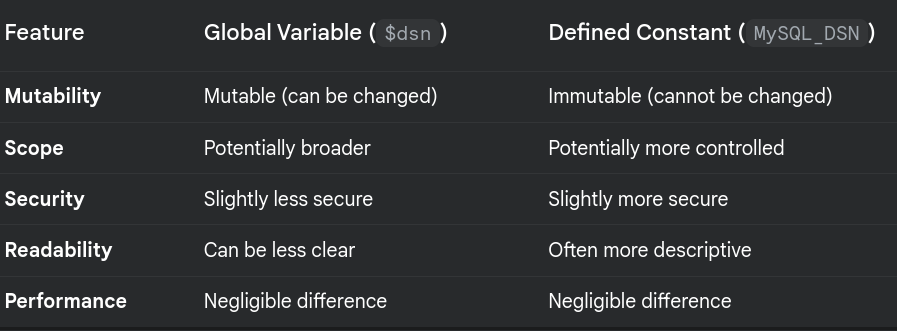

We also started expanding our team, hiring new staff members through direct recruitment and referrals. Within the team, there were always friendly debates, particularly between me and my Java programmer friend, about the merits of PHP versus Java. One day, during one of these debates, I decided to settle the matter with a quick demonstration. I created a simple PHP page with just this code.

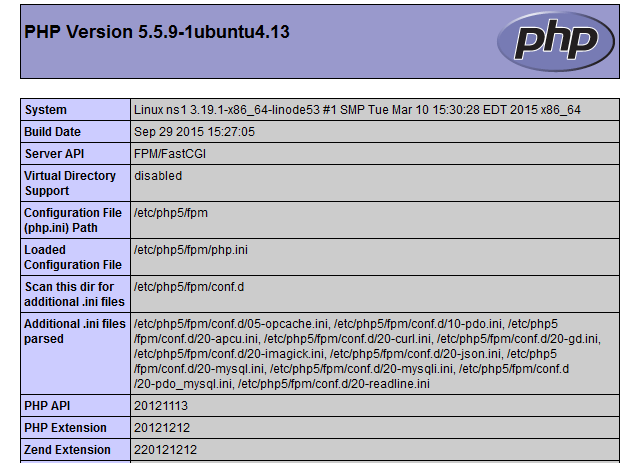

<?php phpinfo(); ?>

I opened the page in a browser, and in a matter of seconds, a detailed report appeared. Which is similar to what is shown here.

I then challenged my Java programmer friend to produce a similar output in the same timeframe using Java. He then, with a good-natured sigh, admitted defeat. ‘Okay, okay,’ he conceded, ‘PHP is better… for this at least.’

Towards the end of 2005, we were facing a frustrating and recurring problem: employee attrition. We were investing significant time and resources in recruiting and training new team members, only to see them leave after just three to six months, often citing offers from companies located within Trivandrum Technopark. We discussed this internally and decided that we needed to secure a Technopark address, “by hook or crook.” By 2006, our CEO managed to forge a mutually beneficial association with another company already located within the park, sharing their office space.

By 2008, our company had grown considerably, and the space-sharing arrangement within Technopark was no longer sustainable. Our CEO focused his efforts on securing external investment. His hard work paid off, and he managed to convince a major investor to back our company. This influx of capital allowed us to make a significant upgrade: we moved into a spacious 40-seater office within Technopark.

As resources increased, we quickly ran into a network bottleneck. Our existing network was a simple wired setup using a hub with only six ports. Recognizing this limitation, I suggested that we make a more significant upgrade: transitioning to a wireless network. This would allow us to easily add new systems simply by installing wireless network cards. We opted for relatively inexpensive PCI Wi-Fi cards from D-Link, which used Atheros chipsets. However, these cards didn’t have native Linux drivers at the time, meaning they wouldn’t work out of the box with our Fedora systems.

Fortunately, I was familiar with a tool called ndiswrapper, which lets Linux load Windows network drivers. I took on the task of wrapping the Windows drivers supplied with the D-Link cards using ndiswrapper, and after some careful work I got the Wi-Fi cards working perfectly. This was a significant accomplishment. News of my success quickly reached the distributor, who was facing a major problem at Calicut University, where they had supplied 200 of these PCI cards. The university ran Fedora Linux exclusively, and as a result none of the cards were working. The distributor, along with the marketing manager for D-Link, contacted me and arranged my transportation to Calicut University. With the assistance of the university’s lab assistants, we set to work, and within about three hours all 200 Wi-Fi cards were up and running. The university staff were extremely grateful, and the distributor was relieved that the payment issue was resolved.

I insisted that our developers work in a Linux environment. I believed that Linux provided a superior development experience, with its powerful command-line tools, robust scripting capabilities and overall stability. To facilitate this, we migrated all developer machines to Fedora. Alongside this, Subversion and Jenkins were set up to automatically deploy any committed code to our local web server, and I introduced a practice of manual lint checking. The impact on our code quality was immediately noticeable.

We bagged a project to create a web application for a travel agency portal, integrating airline ticketing through the Galileo GDS API and, further, low-cost airline APIs. Our technical architects were keen on a WordPress front end, since I already had ample exposure to WordPress and about five team members were fully trained in WordPress theme customization and plugin development. From the start I was worried about doing the searches sequentially: there were multiple API calls, and the front end would have to wait until all of them were over. After giving it deep thought, I arrived at a solution: use memcached as a central location. Search information submitted from the front end would be handled by a plugin method and normalized into a JSON structure, stored in memcached under a request ID that would be used through to the final stage. The front end would then start polling another plugin method, which looked in memcached for the request ID with a results suffix; once that key was populated, it held information about how many pages of results were stored in memcached. The actual back-end search was handled by a shell script that launched multiple PHP scripts in the background using the & operator and watched the output of the jobs. This made use of the operating system’s ability to run the PHP jobs as separate, isolated processes in parallel, improving search efficiency by 70%. The first time this worked, the whole team welcomed it with a loud cheer and applause.
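The request-ID protocol described above can be sketched in PHP. This is a minimal illustration under assumptions: `ArrayCache` is a hypothetical in-memory stand-in with memcached-style `get`/`set` (get returns false on a miss) so the sketch runs without a memcached server, and the key names (`<id>`, `<id>_results`, `<id>_page_<n>`) and form fields are illustrative rather than the plugin's actual ones.

```php
<?php
// Minimal sketch of the request-ID polling protocol. ArrayCache is a
// hypothetical in-memory stand-in with memcached-style get/set (get returns
// false on a miss); in production the Memcached extension would play this
// role. Key names and form fields are illustrative only.

final class ArrayCache
{
    private array $data = [];

    public function set(string $key, $value): bool
    {
        $this->data[$key] = $value;
        return true;
    }

    public function get(string $key)
    {
        return $this->data[$key] ?? false;
    }
}

/** Front end submits a search: normalize it to JSON and store it under a new request ID. */
function submitSearch(ArrayCache $cache, array $form): string
{
    $requestId = bin2hex(random_bytes(8));
    $normalized = [
        'from' => strtoupper(trim($form['from'])),
        'to'   => strtoupper(trim($form['to'])),
        'date' => $form['date'],
    ];
    $cache->set($requestId, json_encode($normalized));
    return $requestId;
}

/** A background search job stores result pages, then writes the summary key last. */
function storeResults(ArrayCache $cache, string $requestId, array $pages): void
{
    foreach ($pages as $i => $page) {
        $cache->set("{$requestId}_page_{$i}", json_encode($page));
    }
    // Writing the summary last means a poller never sees a page count
    // before every page is in the cache.
    $cache->set("{$requestId}_results", json_encode(['pages' => count($pages)]));
}

/** Polling endpoint: null until the results key exists, then the page count. */
function pollResults(ArrayCache $cache, string $requestId): ?int
{
    $summary = $cache->get("{$requestId}_results");
    return $summary === false ? null : json_decode($summary, true)['pages'];
}

$cache = new ArrayCache();
$id = submitSearch($cache, ['from' => ' trv', 'to' => 'dxb ', 'date' => '2010-05-01']);
var_dump(pollResults($cache, $id)); // NULL: search still running
storeResults($cache, $id, [['fares' => [1]], ['fares' => [2]]]);
var_dump(pollResults($cache, $id)); // int(2)
```

The design point is that the web request returns immediately with the request ID, while the slow API calls happen elsewhere; the only shared state is the cache, keyed by that ID.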

Further down the line, when we reached the final stage of integrating the air ticketing solution with the GDS as well as low-cost airlines, the ticketing activity showed intermittent failures. We identified the pain point as the multiple handshakes between our server and the airline APIs, each traversing an international border. To solve this, I got a leased VPS from a US-based hosting provider and developed a broker application to run there. Our colocated server in Cochin would send a payload to the broker in the US using an HTTP POST; the multiple handshakes would then happen between the airline API and our broker, and the final result would be sent back with another HTTP POST to a specific URL on our colocated server, to be pushed to the corresponding front end. This significantly improved the ticketing process, and failures dropped drastically.
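The broker idea (run the chatty handshake sequence close to the airline API, then make a single POST back) can be sketched in PHP. This is a hypothetical illustration, not the original broker: the payload fields `request_id`, `callback_url` and `steps` are assumptions, and `$airlineCall` stands in for the real GDS or airline client.

```php
<?php
// Minimal sketch of the relay-broker idea. The payload fields (request_id,
// callback_url, steps) are hypothetical, and $airlineCall stands in for the
// real airline/GDS client. Only two requests cross the international link:
// the incoming POST and the single POST back with the final result.

function runHandshakes(array $payload, callable $airlineCall): array
{
    $state = ['session' => null];
    foreach ($payload['steps'] as $step) {
        // Each step may depend on state from the previous one
        // (e.g. a session token), so the steps run in order.
        $state = $airlineCall($step, $state);
    }
    return ['request_id' => $payload['request_id'], 'result' => $state];
}

/**
 * POST the final result back to the caller's callback URL.
 * @return string|false response body, or false on failure
 */
function postBack(string $callbackUrl, array $result)
{
    $context = stream_context_create([
        'http' => [
            'method'  => 'POST',
            'header'  => "Content-Type: application/json\r\n",
            'content' => json_encode($result),
            'timeout' => 30,
        ],
    ]);
    return file_get_contents($callbackUrl, false, $context);
}

// Local demo with a fake airline client; postBack() is not called here.
$fakeAirline = function (string $step, array $state): array {
    $state['log'][] = $step;
    if ($step === 'open_session') {
        $state['session'] = 'S1';
    }
    return $state;
};
$payload = [
    'request_id'   => 'abc123',
    'callback_url' => 'https://example.com/ticketing/callback',
    'steps'        => ['open_session', 'price', 'book', 'close_session'],
];
$final = runHandshakes($payload, $fakeAirline);
echo implode(',', $final['result']['log']), PHP_EOL;
```

In a real deployment the broker would call `postBack($payload['callback_url'], $final)` once the sequence completes; keeping the handshakes and the HTTP transport in separate functions makes the sequence testable without a network.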

Then it was time to expand the airline solution: we bagged an order from an established business group to implement it in over 4,500 business outlets across the country. There were heavy branding and white-labelling requirements with theme changes. Thanks to WordPress these were a breeze, and with its strong SEO capabilities, a few additional custom plugins and an optimized database structure, the implementation took only 30% of the estimated time.

By this time I had authored Open PHP MyProfiler, a tool to profile MySQL queries in a PHP environment. Its main advantage was that it needed no extra installation and no new language to learn, as it was written entirely in PHP. Hence it could be installed on shared hosting such as that provided by GoDaddy or Hostinger. Yes, it had some limitations, but across its different versions Open PHP MyProfiler has had 10,000 downloads as of this writing. Check it out on the blog, where I may add enhancements and new releases.
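The core idea of profiling queries in plain PHP, with nothing to install, is to wrap each query call with a timer. The sketch below is a hypothetical illustration in that spirit, not the tool's actual code or API: `QueryProfiler` and its methods are invented names, and `$execute` stands in for whatever runs the SQL (mysqli, PDO, or WordPress's `$wpdb`).

```php
<?php
// Minimal sketch of profiling queries in plain PHP, in the spirit of the tool
// described above but NOT its actual code or API. QueryProfiler is a
// hypothetical class; $execute stands in for whatever runs the SQL.

final class QueryProfiler
{
    /** @var array<int, array{sql: string, ms: float}> */
    private array $log = [];

    public function run(string $sql, callable $execute)
    {
        $start = hrtime(true); // monotonic clock, nanoseconds
        try {
            return $execute($sql);
        } finally {
            // Record the timing even if the query throws.
            $this->log[] = [
                'sql' => $sql,
                'ms'  => (hrtime(true) - $start) / 1e6,
            ];
        }
    }

    /** Logged queries, slowest first. */
    public function report(): array
    {
        $log = $this->log;
        usort($log, fn($a, $b) => $b['ms'] <=> $a['ms']);
        return $log;
    }
}

$profiler = new QueryProfiler();
$fakeDb = function (string $sql) {
    usleep(strpos($sql, 'JOIN') !== false ? 20000 : 1000); // simulate a slow join
    return [];
};
$profiler->run('SELECT * FROM posts WHERE id = 1', $fakeDb);
$profiler->run('SELECT * FROM posts p JOIN meta m ON m.post_id = p.id', $fakeDb);

foreach ($profiler->report() as $entry) {
    printf("%8.2f ms  %s\n", $entry['ms'], $entry['sql']);
}
```

Since only core PHP functions are used, a wrapper like this can run on shared hosting where installing a server-side profiler is not an option.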

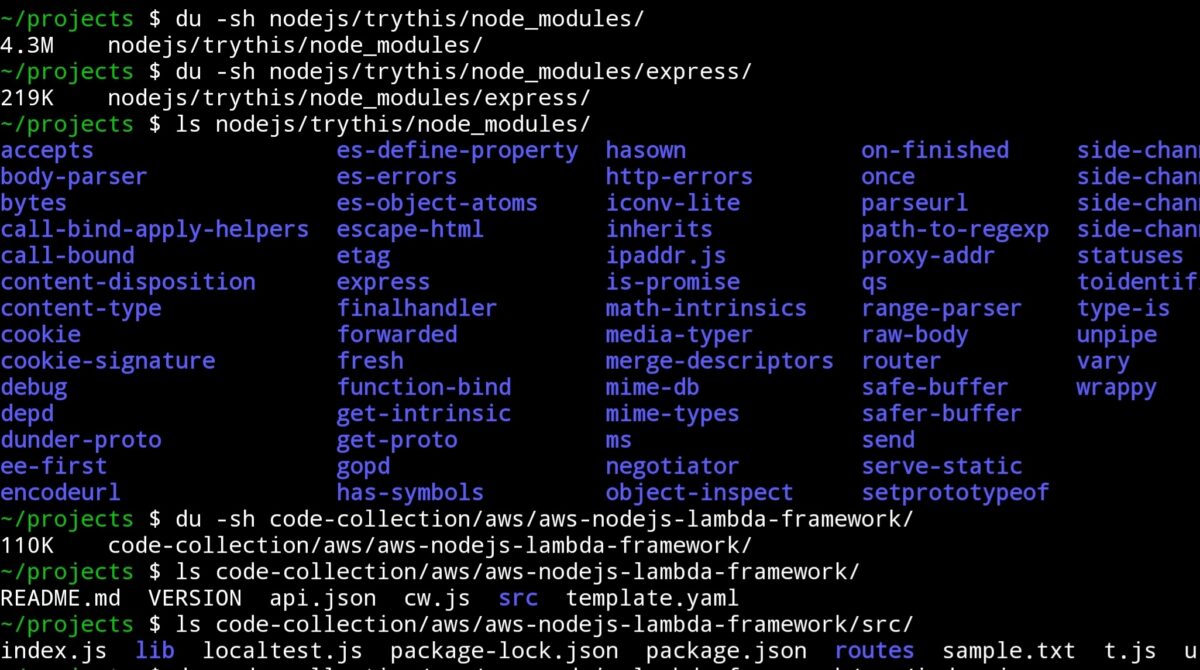

Embracing the Cloud and Serverless

As our business grew, the cost of maintaining our infrastructure became a growing concern. We were paying for both a colocated server in Cochin and a VPS in the US, which added up to a significant expense. After significant assessments and careful analysis of various options, I suggested that we consider migrating our infrastructure to Amazon Web Services (AWS). After some discussion and further evaluation, the management team agreed to proceed with the migration.

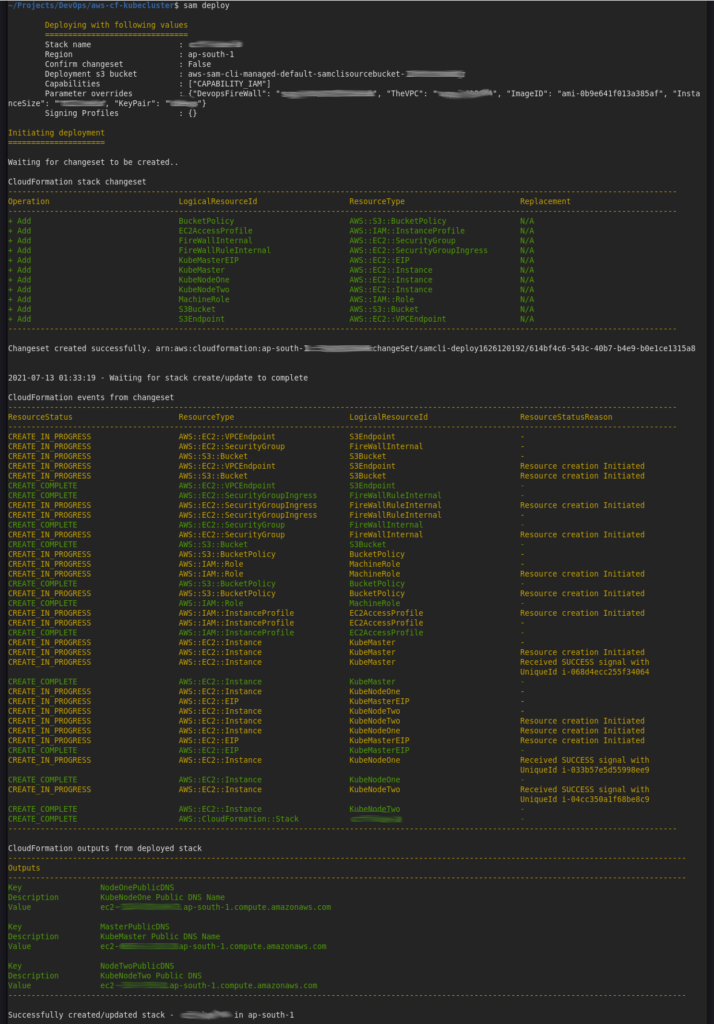

Once we decided to migrate to AWS, I took the lead in implementing the transition. I managed the migration using EC2 for our virtual servers, ElastiCache for caching, and S3 for storage. This was a significant improvement over our previous setup, and I soon began to explore more advanced services and architectural patterns.

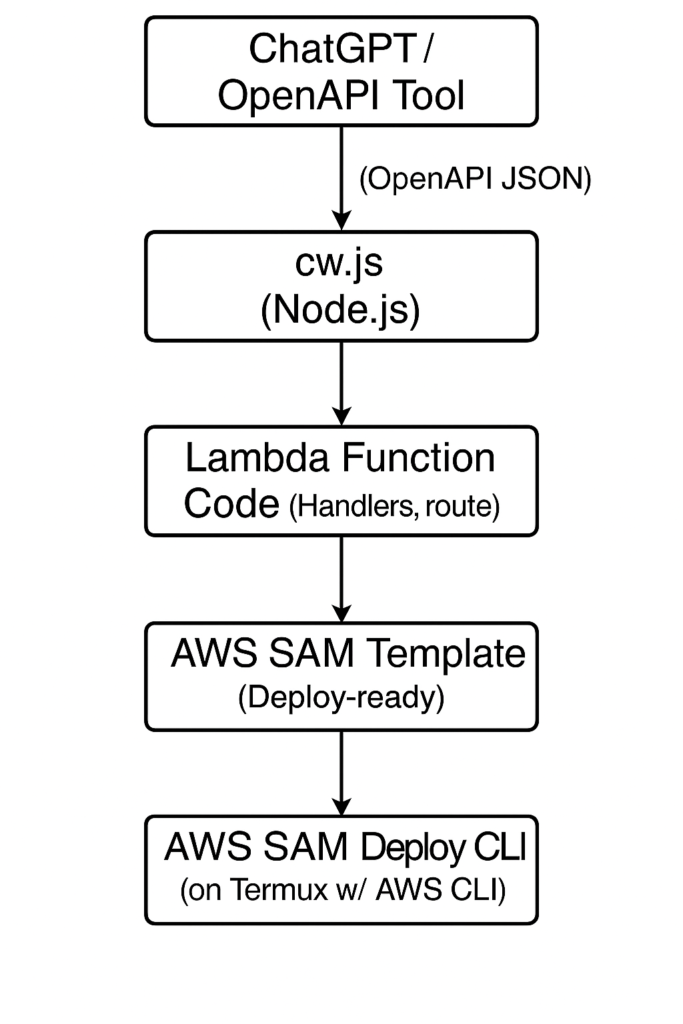

Around the second half of 2010, the state Police Department approached us to develop a crowd management solution. After analyzing their existing processes, I proposed developing a completely new PHP framework with better security and a smaller footprint, for improved performance. This led to the creation of phpmf, a lightweight routing framework that I later shared on GitHub. At less than 5 KB, phpmf was extremely efficient. Hosted on AWS EC2 with Auto Scaling and Elastic Load Balancing (ELB), the solution handled peak traffic of 3,000 to 5,000 visitors per minute with ease.

Later, in 2015, when AWS announced general availability of Lambda with Node.js functions, we decided to migrate the image validation process to a serverless architecture. Images were uploaded directly to S3, and S3 events triggered a Node.js Lambda function that validated the image type and resolution. We also implemented a clever check to catch users attempting to upload invalid image files: we discovered that some users were renaming BMP files as JPGs to bypass the validation. To detect this, we checked the file’s ‘magic header’, the first few bytes of a file that identify its true file type.
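The magic-header check can be illustrated with a short PHP sketch. This is not the Node.js Lambda code described above; the function names are hypothetical, while the byte signatures are the standard leading bytes of each format (JPEG `FF D8 FF`, PNG `89 50 4E 47 0D 0A 1A 0A`, GIF `GIF8`, BMP `BM`).

```php
<?php
// Minimal sketch of a magic-header check. The original ran as a Node.js
// Lambda function; this PHP version only illustrates the idea. The
// signatures are the standard leading bytes of each format.

const MAGIC_HEADERS = [
    'jpg' => "\xFF\xD8\xFF",
    'png' => "\x89PNG\r\n\x1A\n",
    'gif' => 'GIF8',
    'bmp' => 'BM',
];

/** Return the type detected from the first bytes, or null if unknown. */
function detectImageType(string $bytes): ?string
{
    foreach (MAGIC_HEADERS as $type => $magic) {
        if (strncmp($bytes, $magic, strlen($magic)) === 0) {
            return $type;
        }
    }
    return null;
}

/** True only if the file's content matches the extension it claims. */
function extensionMatchesContent(string $filename, string $bytes): bool
{
    $ext = strtolower(pathinfo($filename, PATHINFO_EXTENSION));
    if ($ext === 'jpeg') {
        $ext = 'jpg';
    }
    return detectImageType($bytes) === $ext;
}

// A BMP renamed to .jpg still starts with "BM", so it is rejected.
var_dump(extensionMatchesContent('photo.jpg', "BM\x36\x00\x00\x00"));           // bool(false)
var_dump(extensionMatchesContent('photo.jpg', "\xFF\xD8\xFF\xE0\x00\x10JFIF")); // bool(true)
```

Only the first few bytes are needed, so in an S3-triggered setup the validator could fetch a small byte range of the object rather than the whole image.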

Continuing in this direction, we shifted PDF coupon generation from the inline PHP FPDF system to a Java-based AWS Lambda function, which increased the achieved concurrency from 60 to 400, an improvement of about 567%. Another analysis showed that costs were also drastically reduced. I don’t have a reference for the figures as of now, but I remember someone stating in a discussion that the overall cost reduction was about 30% compared with the same period of the previous year.
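The concurrency improvement is a relative increase over the original figure of 60, worked out as:

$$
\frac{400 - 60}{60} \times 100\% = \frac{340}{60} \times 100\% \approx 566.7\%
$$

In other words, the Lambda-based setup sustained about 6.7 times the earlier concurrency (400 / 60).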

High-Profile Projects and Continued Innovation

The lessons I learned from these diverse experiences gave me the confidence and technical acumen to design and develop a comprehensive application for Kotak Mahindra Bank. This application incorporated a complex conditional survey that adapted to user responses, providing a personalized experience. I chose to build this application using a completely serverless architecture, leveraging the power and scalability of AWS Lambda, API Gateway, and other serverless services. Static files for the front-end were hosted directly on S3. For the data store, I selected AWS DynamoDB.
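A conditional survey that adapts to user responses is essentially a small graph in which each answer points to the next question. The PHP sketch below illustrates that structure under assumptions: the question IDs, the placeholder text and the `next` map are hypothetical, and the real application stored its data in DynamoDB behind API Gateway and Lambda rather than in a PHP array.

```php
<?php
// Minimal sketch of a conditional (branching) survey, assuming each question
// maps answers to the next question ID. Question IDs, placeholder text and the
// 'next' structure are hypothetical; the real application kept its data in
// DynamoDB behind API Gateway and Lambda.

$survey = [
    'q1' => [
        'text' => 'Question 1 (placeholder)',
        'next' => ['yes' => 'q2', 'no' => 'q3'],
    ],
    'q2' => [
        'text' => 'Question 2 (placeholder)',
        'next' => ['yes' => null, 'no' => null], // null ends the survey
    ],
    'q3' => [
        'text' => 'Question 3 (placeholder)',
        'next' => ['yes' => null, 'no' => null],
    ],
];

/**
 * Given the answers so far (question ID => answer), return the ID of the
 * next question to show, or null when the survey is complete.
 */
function nextQuestion(array $survey, string $start, array $answers): ?string
{
    $current = $start;
    while ($current !== null && array_key_exists($current, $answers)) {
        $answer = $answers[$current];
        if (!array_key_exists($answer, $survey[$current]['next'])) {
            throw new InvalidArgumentException("Unexpected answer '$answer' for $current");
        }
        $current = $survey[$current]['next'][$answer];
    }
    return $current;
}

echo nextQuestion($survey, 'q1', []), PHP_EOL;                        // q1
echo nextQuestion($survey, 'q1', ['q1' => 'no']), PHP_EOL;            // q3
var_dump(nextQuestion($survey, 'q1', ['q1' => 'yes', 'q2' => 'no'])); // NULL
```

Because the next question is derived purely from the answers so far, a stateless Lambda function can compute it on every request without keeping session state on the server.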

Building on the success and experience gained from developing the application for Kotak Mahindra Bank, I next took on an even more ambitious project: the development of a complete serverless news portal and news desk management system for Janmabhoomi Daily, a major news agency. This project presented a unique set of challenges, especially in handling real-time updates and ensuring data consistency across the distributed serverless architecture. We used AWS AppSync for real-time data synchronization between the news desk management system and the public-facing portal. I designed the entire solution using a fully serverless architecture on AWS, leveraging services like Lambda, API Gateway, S3, and DynamoDB. The news portal was designed for high availability and scalability, capable of handling large volumes of traffic during breaking news events. The news desk management system streamlined the editorial workflow, allowing journalists and editors to easily create, edit, and publish news articles. This project further solidified my expertise in serverless technologies.

Since we were heavily into WordPress, it was natural to create a WordPress plugin implementing Open PHP MyProfiler, along with a sampler that reports the time taken by each query for each request URL. The sampler output also shows the number of queries a page runs to generate its output. An expert MySQL architect can then run the slow queries with an EXPLAIN prefix to understand why they take so long. This analysis can pinpoint inefficient database indexing, and fixing it makes the system run faster.
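The sampler's two outputs, per-URL query counts and timings plus EXPLAIN candidates, can be sketched in PHP. This is a hypothetical illustration, not the plugin's code: the log entry fields (`url`, `sql`, `ms`) and the 50 ms threshold are assumptions.

```php
<?php
// Minimal sketch of a per-URL query sampler, assuming each logged entry
// records the request URL, the SQL and its duration in milliseconds. Field
// names and the 50 ms threshold are hypothetical, not the actual plugin's.

/** Summarize query count and total time per request URL. */
function summarizeByUrl(array $entries): array
{
    $summary = [];
    foreach ($entries as $e) {
        $url = $e['url'];
        $summary[$url]['queries'] = ($summary[$url]['queries'] ?? 0) + 1;
        $summary[$url]['total_ms'] = ($summary[$url]['total_ms'] ?? 0.0) + $e['ms'];
    }
    return $summary;
}

/** Build EXPLAIN statements for SELECT queries at or above the threshold. */
function explainCandidates(array $entries, float $thresholdMs = 50.0): array
{
    $out = [];
    foreach ($entries as $e) {
        $sql = ltrim($e['sql']);
        if ($e['ms'] >= $thresholdMs && stripos($sql, 'SELECT') === 0) {
            $out[] = 'EXPLAIN ' . $sql;
        }
    }
    return array_values(array_unique($out));
}

$log = [
    ['url' => '/shop', 'sql' => 'SELECT * FROM wp_posts WHERE post_type = "product"', 'ms' => 120.0],
    ['url' => '/shop', 'sql' => 'SELECT option_value FROM wp_options WHERE option_name = "siteurl"', 'ms' => 0.4],
    ['url' => '/about', 'sql' => 'SELECT * FROM wp_posts WHERE ID = 2', 'ms' => 0.8],
];

print_r(summarizeByUrl($log));
print_r(explainCandidates($log));
```

The EXPLAIN output for each candidate shows which indexes MySQL considered and how many rows it expects to scan, which is where missing or inefficient indexes usually show up.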

Deep into AWS and Community Building

By this time I had added a few feathers to my cap: the AWS Certified Solutions Architect Associate and the AWS Certified DevOps Administrator Associate. I was also nose-deep in community building as co-organiser of the AWS User Group Trivandrum. Along with this, the resounding success of the crowd management solution narrated earlier kept me in the limelight, and AWS invited me to many events to deliver talks about my experiences in shifting from traditional hosting to a serverless mindset.

Because of my active participation in these community activities, AWS invited me to join a beta program that is now known as the AWS Community Builders program. There too, I delivered many sessions to different user groups and at a few technology summits, travelling frequently to Chennai, Bangalore, Mumbai and Cochin for them. Everywhere I was welcomed warmly and treated with great respect.

Next Phase in the Career

In 2018, due to some other difficulties and complications, there was a business transfer, and our company merged with two others to form a new digital solutions company, a major share of whose staff had deep exposure to SAP. With my certifications and deep knowledge of cloud technologies and networking, I was naturally accepted as CTO of the new conglomerate.

Once we had settled in, the existing Cisco router was not enough to handle overall security and the in-house servers, along with bandwidth pooling across two internet service providers. The systems engineer and the CEO of the combined company were planning to procure new hardware when I intervened. I suggested that we reuse a salvaged multihomed rack server that had been set aside because it could not run the Windows Server operating system, and implement pfSense on it: a lightweight packet-filtering firewall and utility built on top of FreeBSD. pfSense also has a versatile and intuitive web interface, so configuration and monitoring can be done from any standard browser, desktop or mobile. The implementation was smooth, and the company saved around 2,00,000 INR in hardware costs.

While working there and handling some critical applications, I had to take leave for a few days to attend an AWS User Group event in Bangalore. I decided to configure a PPTP VPN for my own access, with authentication handled by the internal LDAP server on Ubuntu that all staff were already using for login. Although LDAP requires manual CLI intervention to add or remove logins, I had already created a Jenkins project that performed these functions in the background, with an easy-to-use web front end in the Jenkins interface.
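A Jenkins job that adds LDAP logins ultimately has to produce an LDIF entry for `ldapadd`. The PHP sketch below shows one hypothetical way to build such an entry; the base DN, the `ou=people` branch, the choice of `inetOrgPerson` plus `posixAccount`, and the UID/GID numbering are all assumptions, since the post does not describe the original job's details.

```php
<?php
// Minimal sketch of generating an LDIF entry for a new LDAP login, as a
// Jenkins job might. The base DN, ou=people, the object classes chosen and
// the UID/GID numbering are hypothetical; the original job is not described.

function userLdif(string $uid, string $givenName, string $surname, int $uidNumber,
                  string $baseDn = 'dc=example,dc=com'): string
{
    // Restrict uid to safe characters so it cannot break the DN or LDIF lines.
    if (!preg_match('/^[a-z][a-z0-9._-]*$/', $uid)) {
        throw new InvalidArgumentException("Invalid uid: $uid");
    }
    $lines = [
        "dn: uid=$uid,ou=people,$baseDn",
        'objectClass: inetOrgPerson',
        'objectClass: posixAccount',
        "uid: $uid",
        "cn: $givenName $surname",
        "givenName: $givenName",
        "sn: $surname",
        "uidNumber: $uidNumber",
        "gidNumber: $uidNumber", // one private group per user (assumption)
        "homeDirectory: /home/$uid",
        'loginShell: /bin/bash',
    ];
    return implode("\n", $lines) . "\n";
}

echo userLdif('jdoe', 'John', 'Doe', 10001);
```

The generated file could then be applied with something like `ldapadd -x -D <admin DN> -W -f user.ldif`, and a password set separately with `ldappasswd`. Names containing non-ASCII characters would need base64 encoding in LDIF, which this sketch does not handle.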

I was interviewed by CEO Insights Magazine; you can read the article about me in their archives.

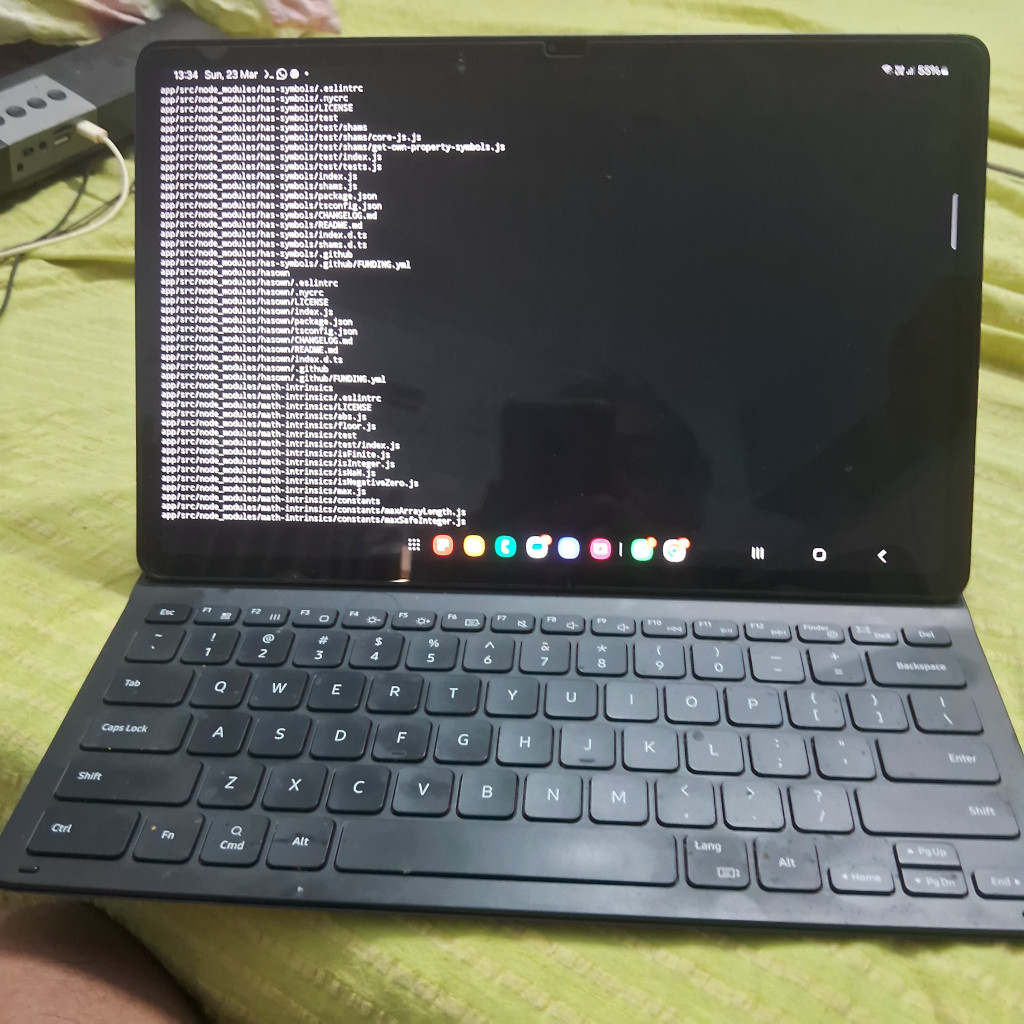

Things were going smoothly, with many new projects and clients as well as AWS community activities. Towards the end of 2019, I was preparing to attend the AWS Summit 2020 in Mumbai; the discounted delegate tickets and flight tickets had already been purchased when the pandemic broke all predictions in 2020. Due to the pandemic, being a high-value resource, I was advised to resign. I did so, with June as my completion date to allow for knowledge transfer and credentials handover; everything required was documented, and I started the handover. Meanwhile, the pfSense and PPTP VPN setup implemented long before came into its own: 80% of the staff were able to access the internal hosting and the Jenkins interface through the VPN without any further hardware or configuration expense.

My LinkedIn network was quite large, including people I had interacted with through community building in ILUG and AWS and others who had trained under me. A few of them recommended me to other establishments, and finally in July I was placed as Solution Architect with UST Global, Trivandrum, India, with a remote working facility. CEO Insights Magazine did another interview with me, and the article was published on their portal.

With remote work, my working hours were quite flexible and I had plenty of free time, which I decided to use beneficially. I volunteered to support the District Disaster Management Department, taking charge of coordinating several student volunteers from different colleges. The department was headed by the then Trivandrum District Collector, Dr. Gopalakrishnan, a very efficient and dynamic personality who greatly appreciated my work, as I had single-handedly developed a data collection system to gather information about skilled labourers from different parts of the country staying in several construction camps spread around Trivandrum District. This responsive application was hosted on AWS with the help of the AWS team, whom I convinced to provide pandemic support credits for the department’s AWS account. I take this opportunity to thank the staff and management of VelosIT Technologies for kindly permitting us to use their facilities in Trivandrum Technopark to develop the application. The data collection was assigned to the student volunteers, who carried it out promptly. Finally, with some data analytics and reports, a special train with multiple collection points and appropriate segregation could be arranged to take the labourers to their respective destinations en route.

The arrangement with UST Global was a contract that had to be renewed every six months. Sometimes the renewal was delayed, which delayed my salary, and finally I got really fed up. I sought another placement in a consulting setup, compromising on some facilities, and this materialized in April 2022 as Technical Architect at Quest Global, Technopark, Trivandrum. I did not like the environment there and quit very soon. The CEO Insights reporter, being a LinkedIn follower, came to know about the job switch, and yet another interview was published.

The Community Commitment

Starting in 2004 with https://phpmyib.sourceforge.net/, I took it as a commitment to give back to the developer community, as I had received a lot from it, starting with my first interaction in 1997 with the renowned PCQuest author Atul Chitnis, who introduced me to Linux. This commitment continues at https://github.com/jthoma, which is consistently updated with scripts and utilities. Open PHP MyProfiler is another detailed tool that shows my proficiency in PHP and MySQL.

Further Personal Interests

I had other interests too, namely motorbike riding and agricultural research. On the bike I always tried to be as careful as possible. For almost every ride longer than 20 minutes I would wear knee and elbow guards, gloves with knuckle protection, shoes with steel padding at the front and back, and a full-face helmet. My luggage would be wrapped in a polyurethane sheet and fastened to the pillion seat. I always ride solo on such trips. Naturally, I got involved in a couple of biker clubs and am quite active in the Bajaj Avenger Club.

There is not much to say about the origins of my agricultural research. It started from a parental property: instead of leaving the land idle for nature to fill with weeds, I began cultivating it. During the pandemic I planted a few leafy vegetables. They were attacked by some kind of pest, and after getting advice from YouTube and other sources, my first success was with an organic pesticide. Neem leaves, wild turmeric (Curcuma aromatica) and bird's eye chili (Capsicum frutescens) were ground to a paste, mixed with water and strained through a piece of cotton dhoti before being filled into a portable manually pumped sprayer. The quantities I finally settled on were 500 g of each ingredient in 5 litres of well water. The harvest was used mostly within our family and we never attempted to sell it, so I felt the effort was not worthwhile.

After a detailed discussion with our caretaker, I decided to attempt plantain cultivation on a medium scale that would not exhaust our resources. This is where I developed an organic fertilizer. We identified several large fish cleaning points and collected fish waste from them, treated it with industrial jaggery and fermented yeast for a week, and used the resulting slurry as fertilizer for a marked plot of 10 plantain plants of assorted varieties. The results were really good, with one bunch weighing 30 kg and another 48 kg, and I started selling the produce to local crop procurement agencies.

We were also attacked by a troop of monkeys and by bats. After watching YouTube and doing further research online, I decided to try a suggestion from a farmer who claimed to have implemented it successfully: deploying rubber snakes around the farm. I found this very effective against the monkeys, as they steer away from places where snakes are around. The bats were the next problem. My technology background sparked some ideas, and online research pointed me in the right direction. Using a Raspberry Pi, a high-frequency sound sensor, the bat detector project I had found and good-quality speakers, I managed to drive the bats out of our farmland. The squeaks of a hawk were enough to frighten them, played from a pre-recorded MP3 through the speakers with a bit of amplification.

Another activity was growing a mango tree from a cutting. When KSEB (Kerala State Electricity Board) workers were trimming branches touching the power lines near a nearby hotel, I took one of the cuttings. I applied onion juice to the cut end for 2 hours, then aloe vera gel overnight, and finally planted it in a pot filled with a 1:1 mixture of cocopeat and soil, mixed with a ripe papaya left over from a bat attack. Every day at a fixed time I watered it very sparingly, and once a week I applied one-day-old rice water that I brought from home. On the 12th day new leaves appeared at multiple points, and by the 29th day the potted plant had flowered. A moment of real happiness.

Harvesting papaya was the toughest task. The tree was taller than our standalone ladder, and climbing it the way a coconut climber does is not easy, because a papaya trunk is not as strong as a coconut tree and the sap that oozes out can cause acute itching. So I built an extended mechanical arm from old PVC pipe, using a gas torch, a hand saw and a mini drill. First, I cut the pipe into several pieces so that it would be easy to transport. I heated one end of each piece to soften the PVC and pushed another piece into the softened end, so that once it set, the pieces could easily be assembled on site. Then I sliced the final end vertically three times, to a length of about 1 foot, to create the fingers, and heated the fingers and bent them outward. Using a plastic thread, I cross-connected the fingers and ran the thread end down through the pipes to the bottom-most piece, where I fitted a sleeve and tied the thread to it. When the sleeve is pulled down, the fingers close together, and when it is released, the fingers open up.

See it in action: https://youtu.be/wrVh7uBfBTY