Today I explored a few more AI tools, along with some other utilities.

Using ChatGPT, I generated a prompt for Leonardo AI to create a subtle scenery.

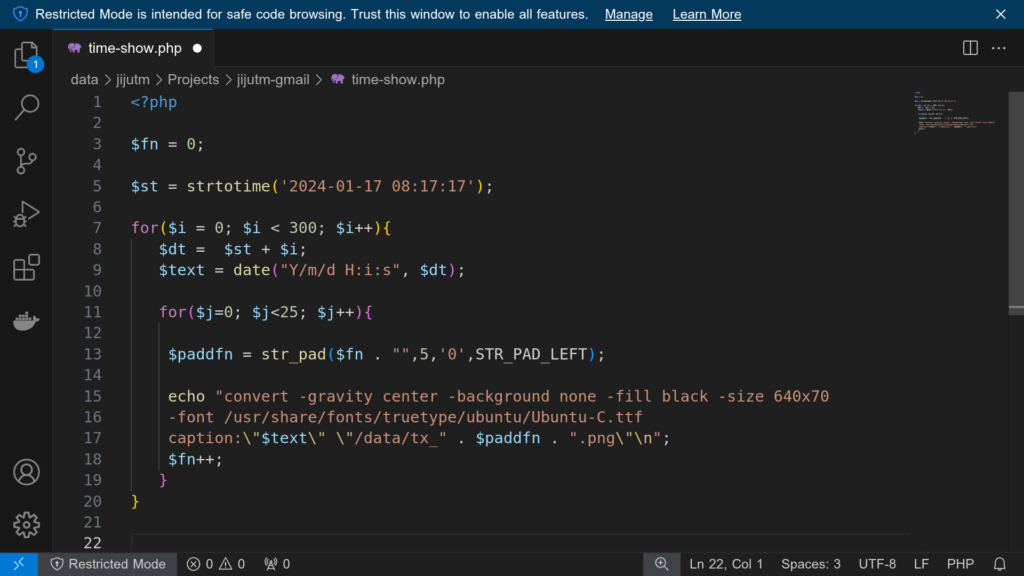

Using GIMP on Ubuntu, I upscaled the scene and cropped it to 810×1440, i.e. a 9:16 aspect ratio. I used an AI music generator to produce a 30-second lo-fi track, bgm.mp3. A PHP script then generated 840 lines in the format `fname brightness contrast`, with the values changing gradually. A loop fed this list to ImageMagick's `convert` to create 840 frames. Finally, `ffmpeg -framerate 30 -i %04d.jpg -i bgm.mp3 -r 30 dusk.mp4` assembled the video. The `-framerate 30` option before the input matters: without it ffmpeg reads an image sequence at 25 fps. At 30 frames per second, 840 frames give a 28-second video that can be shared on Facebook as a reel. That was my target.

Prompt given to ChatGPT:

Suggest a detailed prompt for leonardo ai to generate a scenic view of country side just before sunset and the sun is behind the viewer.

The response, trimmed to the relevant portion, which was then given to Leonardo AI:

A breathtaking countryside landscape bathed in the warm glow of the golden hour, just before sunset. The sun is behind the viewer, casting long, soft shadows over rolling green hills. A winding dirt road leads through fields of wildflowers in shades of lavender, yellow, and white, gently swaying in the breeze. A rustic wooden fence lines the path, guiding the eye toward a quaint farmhouse with a red roof and smoke curling from its chimney. Lush trees with golden-hued leaves dot the horizon, their branches swaying gently. A calm river reflects the soft, pastel hues of the evening sky—lavender, peach, and rose gold—while birds soar gracefully in the distance. The scene exudes warmth, tranquility, and a sense of peaceful solitude.

The generated image had dimensions of 1472×832, although I had set a 16:9 resolution in the Leonardo image generator app.

Since I am on Xubuntu, I used GIMP to upscale it first. The required height was 1440, so the width proportionally came to 2548. Once the scaling was done, I chose Canvas Size from the Image menu and set the width to 810 and the position to center, since I needed 9:16 for a Facebook reel.
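The numbers in this step can be double-checked with a little shell arithmetic. This is only a sketch of the calculation, not something GIMP needs; the function names `scaled_width` and `crop_offset` are made up for illustration.

```shell
# scaled_width SRC_W SRC_H TARGET_H
# Width after scaling proportionally to TARGET_H, rounded to the nearest pixel.
scaled_width() {
  local w=$1 h=$2 th=$3
  echo $(( (w * th + h / 2) / h ))
}

# crop_offset SCALED_W CROP_W
# Left offset of a horizontally centered crop of width CROP_W.
crop_offset() {
  echo $(( ($1 - $2) / 2 ))
}

scaled_width 1472 832 1440   # prints 2548
crop_offset 2548 810         # prints 869
```

So the centered 810-pixel-wide canvas starts 869 pixels from the left edge of the scaled image. If you prefer the command line to GIMP, something like `convert source.jpg -resize x1440 -gravity center -crop 810x1440+0+0 +repage scene.jpg` should give a comparable result; that is an alternative I am assuming would work here, not what was used for this reel, and `source.jpg` is a placeholder name.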

For progressive frame generation, I wrote a short script. The maximum thresholds were first identified in GIMP: under Colors -> Brightness & Contrast, I tweaked the sliders manually until the image was fully black, then rounded the values to numbers that are easy to calculate with, arriving at Brightness -120 and Contrast +60. At 30 frames per second, a 28-second video needs 840 frames. Brightness goes from 0 to -120 over 840 frames, which works out to a reduction of 1 every 7 frames, whereas contrast goes from 0 to +60, an increase of 1 every 14 frames. This was implemented in PHP.
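Before the script itself, here is a quick check of that arithmetic in shell. It is a minimal sketch; `frames_per_step` is a hypothetical helper name, and it assumes the range divides the frame count evenly, as it does here.

```shell
# frames_per_step FPS SECONDS RANGE
# Prints how many frames pass between one-unit changes of a value
# that has to cover RANGE units over the whole clip.
frames_per_step() {
  local fps=$1 seconds=$2 range=$3
  local frames=$(( fps * seconds ))
  echo $(( frames / range ))
}

frames_per_step 30 28 120   # brightness, 0 to -120: prints 7
frames_per_step 30 28 60    # contrast, 0 to +60: prints 14
```

For a different clip length or a different darkening range, the same call gives the step sizes to plug into the script.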

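```php
<?php
/*
 * brightness 0 => -120   one step every 7 frames
 * contrast   0 =>   60   one step every 14 frames
 * frames     840
 */

$list = range(1, 840);

$bt = 0; // frames since the last brightness step
$ct = 0; // frames since the last contrast step
$bv = 0; // brightness magnitude (the minus sign is added in the shell loop)
$cv = 0; // contrast value

foreach ($list as $sn) {
    if ($bt == 7) {
        $bv += 1;
        $bt = 0;
    }
    if ($ct == 14) {
        $cv += 1;
        $ct = 0;
    }
    $bt++;
    $ct++;
    // One line per frame: zero-padded frame number, brightness, contrast
    echo str_pad($sn, 4, '0', STR_PAD_LEFT), " $bv $cv", "\n";
}
?>
```

The script was run from the command line and its output captured in a text file. A while loop then creates the frames using ImageMagick's convert utility.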

```shell
php -q bnc.php > list.txt
mkdir fg
# One darkened copy of scene.jpg per line of list.txt
while read -r fi bv cv; do convert scene.jpg -brightness-contrast "-${bv}x${cv}" "fg/${fi}.jpg"; done < list.txt
cd fg
# -framerate 30 makes ffmpeg read the stills at 30 fps (the image-sequence default is 25)
ffmpeg -framerate 30 -i %04d.jpg -i /home/jijutm/Downloads/bgm-sunset.mp3 -r 30 ../sunset-reel.mp4
```

The bgm-sunset.mp3 was created using an AI music generator and edited in Audacity to add effects such as fade-in and fade-out.
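As an aside, the frame list does not strictly need PHP. Because the counters step at fixed intervals, the values for frame n reduce to integer divisions of n - 1, so the same list can be sketched in plain bash. This is an alternative I am suggesting, not what was used for the reel; `gen_list` and its parameters are hypothetical names.

```shell
# gen_list [FRAMES] [BRIGHTNESS_STEP] [CONTRAST_STEP]
# Prints one line per frame: zero-padded frame number, brightness, contrast.
# Defaults match the reel: 840 frames, brightness every 7, contrast every 14.
gen_list() {
  local frames=${1:-840} bstep=${2:-7} cstep=${3:-14} n
  for (( n = 1; n <= frames; n++ )); do
    # Integer division gives the number of completed steps before frame n
    printf '%04d %d %d\n' "$n" $(( (n - 1) / bstep )) $(( (n - 1) / cstep ))
  done
}
```

Running `gen_list > list.txt` would take the place of the PHP step, and the rest of the pipeline stays the same. The last line it produces is `0840 119 59`, so the final frame stops one step short of the -120 and +60 targets, exactly as with the counter-based script.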

Why this workflow is effective:

Automation: The PHP script and ImageMagick loop automate the tedious process of creating individual frames, saving a lot of time and effort.

Cost-effective: Using open-source tools like GIMP and FFmpeg keeps the cost down.

Flexibility: This approach gives a high degree of control over every aspect of the video, from the scenery to the music and the visual effects.

Efficient: Combining the strengths of different AI tools with traditional image and video processing software makes for a streamlined workflow that gets the job done quickly.

The final reel is on the Facebook page; do have a look at that as well.