In today’s fast-paced tech world, flexibility and portability are paramount. As a developer, I’ve always sought a setup that allows me to code, manage cloud resources, and analyze data from anywhere. Recently, I’ve crafted a powerful and portable development environment using my Samsung Galaxy Tab S7 FE, Termux, and Amazon Web Services (AWS).

The Hardware: A Tablet Turned Powerhouse

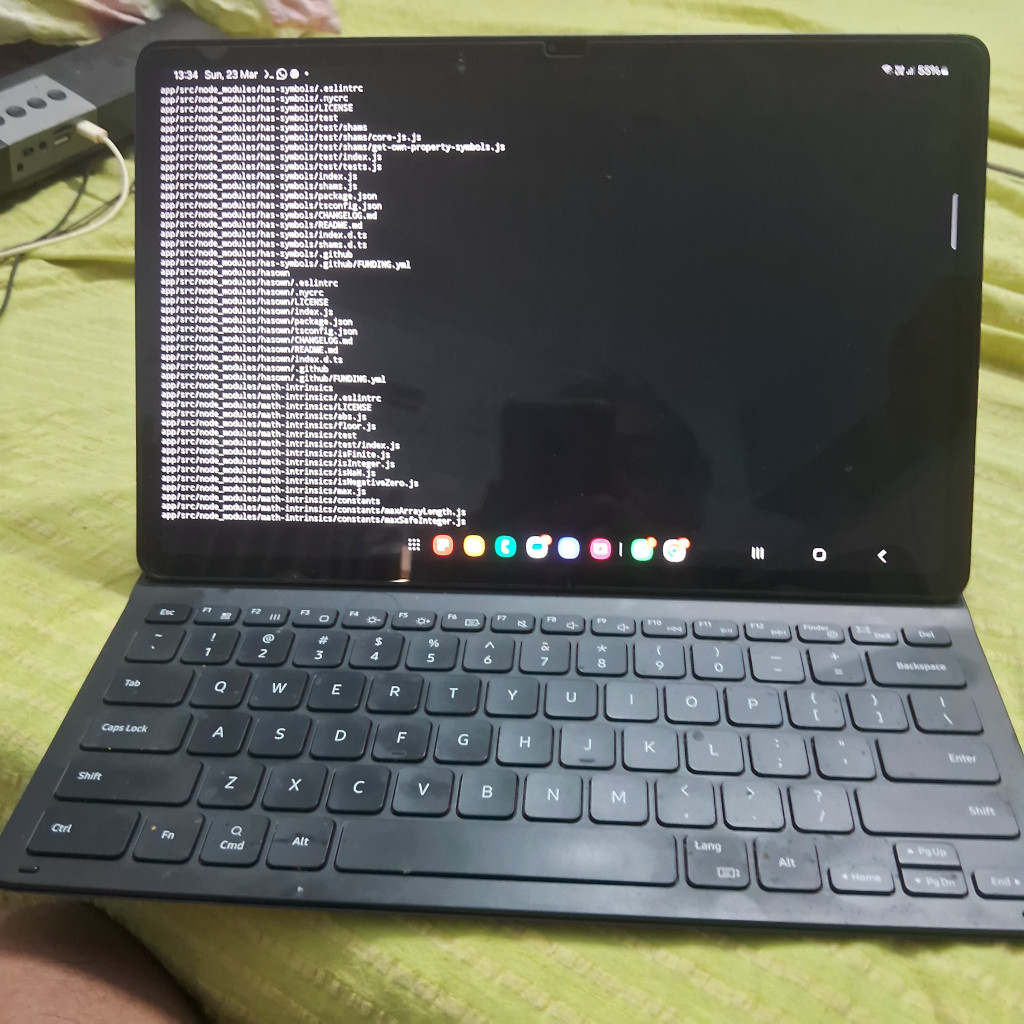

My setup revolves around the Samsung Galaxy Tab S7 FE, paired with its full keyboard book case cover. This tablet, with its ample screen and comfortable keyboard, provides a surprisingly effective workspace. The real magic, however, lies in Termux.

Termux: The Linux Terminal in Your Pocket

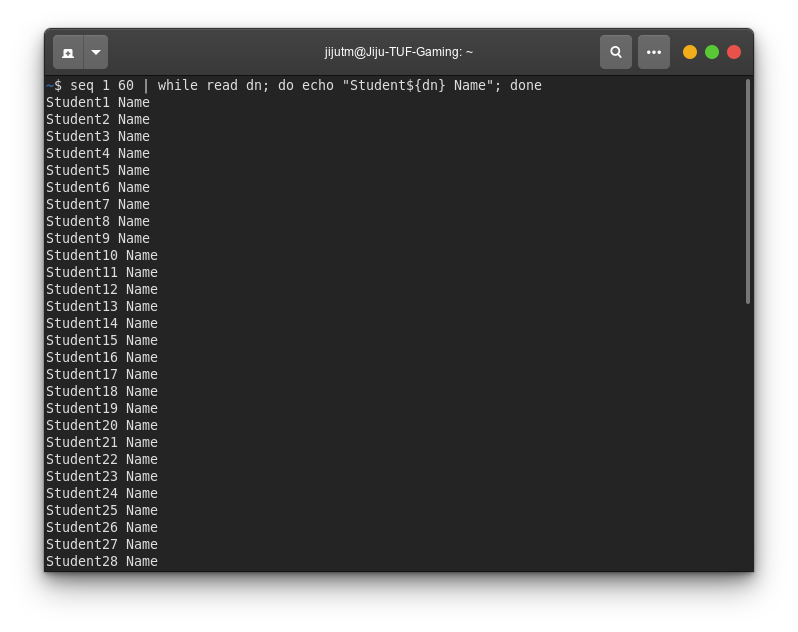

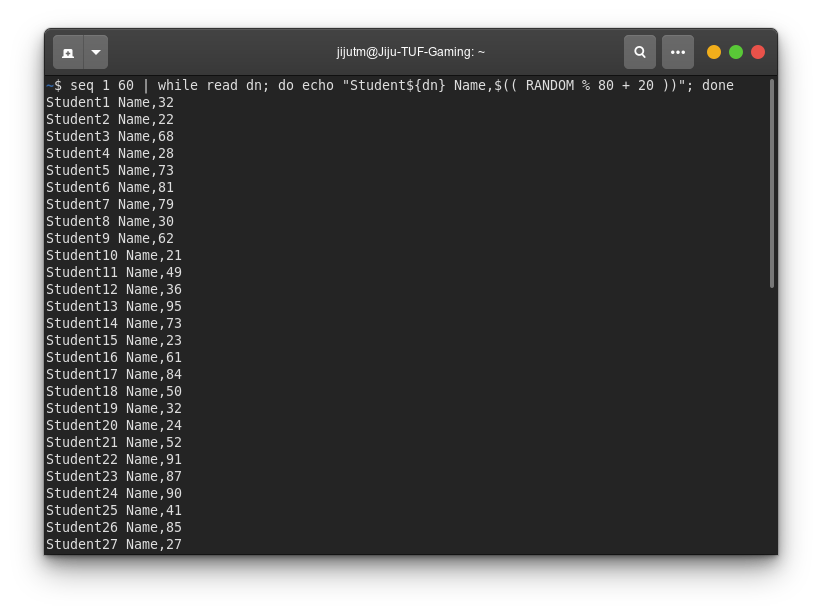

Termux is an Android terminal emulator and Linux environment app that brings the power of the command line to your mobile device. I’ve configured it with essential tools like:

ffmpeg: For multimedia processing.

ImageMagick: For image manipulation.

Node.js 22.0: For JavaScript development.

AWS CLI v2: To interact with AWS services.

AWS SAM CLI: For serverless application development.
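A quick way to confirm that these tools are available in a Termux session is to look each one up on the PATH. The sketch below is only an illustration: `missing_tools` is a hypothetical helper, and the command names in the usage comment (`magick` for ImageMagick, `node`, `aws`, `sam`) are assumptions about how each package exposes itself.

```shell
# Hypothetical helper: print each named command that is not found on PATH.
missing_tools() {
  for tool in "$@"; do
    command -v "$tool" >/dev/null 2>&1 || echo "$tool"
  done
}

# Assumed command names for the tools listed above:
#   missing_tools ffmpeg magick node aws sam
```

An empty result means every command was found; any name printed still needs to be installed.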

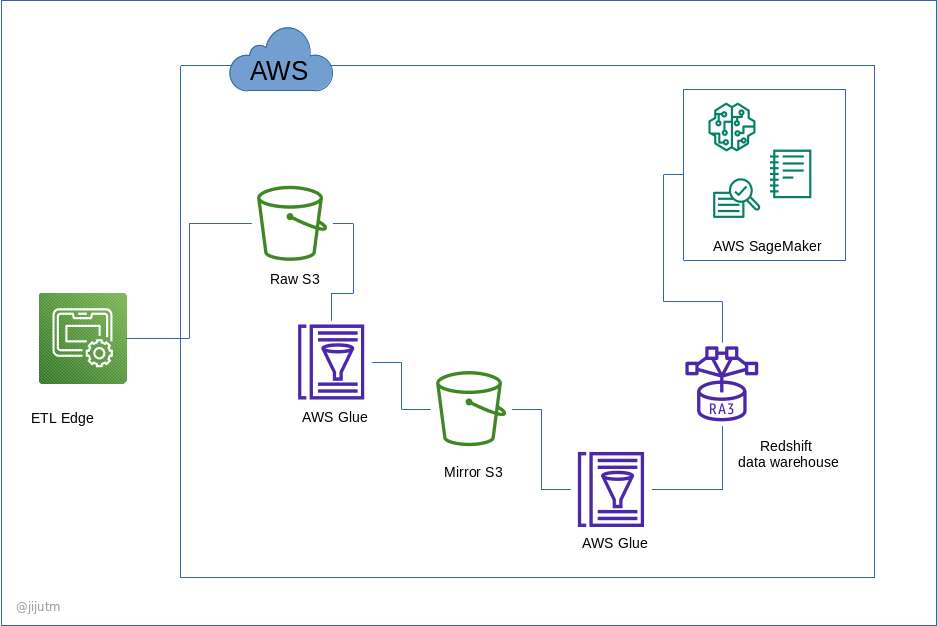

AWS Integration: Cloud Resources at Your Fingertips

To streamline my AWS interactions, I’ve created a credentials file within Termux. This file stores my AWS access keys, region, security group, SSH key path, and account ID, allowing me to quickly source these variables and execute AWS commands.

export AWS_DEFAULT_REGION=[actual region id]

export AWS_ACCESS_KEY_ID=[ACCESS KEY From Credentials]

export AWS_SECRET_ACCESS_KEY=[SECRET KEY from Credentials]

export AWS_SECURITY_GROUP=[a security group id which I have attached to my ec2 instance]

export AWS_SSH_ID=[path to my pem key file]

export AWS_ACCOUNT=[The account id from billing page]

source [path to the credentials.txt]
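Since every later command depends on these variables, it can help to check that they are all set right after sourcing the file. This is a minimal sketch, not part of my original setup: `check_aws_env` is a hypothetical name, and the variable list simply mirrors the exports above.

```shell
# Hypothetical helper: report which of the variables exported by the
# credentials file are unset or empty.
# The body is a subshell ( ... ), so its variables and `exit` stay local.
check_aws_env() (
  missing=0
  for name in AWS_DEFAULT_REGION AWS_ACCESS_KEY_ID AWS_SECRET_ACCESS_KEY \
              AWS_SECURITY_GROUP AWS_SSH_ID AWS_ACCOUNT; do
    # Indirect lookup: copy the value of the variable named by $name.
    eval "value=\${$name:-}"
    if [ -z "$value" ]; then
      echo "missing: $name" >&2
      missing=1
    fi
  done
  exit "$missing"
)
```

Running `check_aws_env || echo "fix the credentials file first"` after the `source` line catches a missing entry before any AWS command runs.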

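The security group ID in this configuration lets me automatically update the group's ingress rules with my current public IP. The shell commands below revoke the older address-specific rules and then grant my current IP blanket access on all protocols and ports.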

currentip=$(curl --silent [my own what-is-my-ip clone - checkout the code ])

aws ec2 describe-security-groups --group-ids "$AWS_SECURITY_GROUP" > ~/permissions.json

grep CidrIp ~/permissions.json | grep -v '/0' | awk -F'"' '{print $4}' | while read cidr;

do

aws ec2 revoke-security-group-ingress --group-id $AWS_SECURITY_GROUP --ip-permissions "FromPort=-1,IpProtocol=-1,IpRanges=[{CidrIp=$cidr}]"

done

aws ec2 authorize-security-group-ingress --group-id $AWS_SECURITY_GROUP --protocol "-1" --cidr "$currentip/32"

The what-is-my-ip code is on GitHub.
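One gap in the commands above: if the IP lookup fails, `currentip` ends up empty or holds an error page, and the old rules are revoked before the final `authorize` call receives a malformed CIDR. A minimal sketch of a guard follows, assuming the lookup returns a plain IPv4 address; `is_ipv4` is a hypothetical helper name.

```shell
# Hypothetical helper: succeed only for four dot-separated octets, each 0-255.
# The body is a subshell ( ... ), so the IFS change and `exit` stay local.
is_ipv4() (
  case "$1" in
    "" | *[!0-9.]* | *..* | .* | *.) exit 1 ;;
  esac
  IFS=.
  set -- $1                      # split the address on dots
  [ "$#" -eq 4 ] || exit 1
  for octet in "$@"; do
    [ "${#octet}" -le 3 ] && [ "$octet" -le 255 ] || exit 1
  done
)

# Intended use, placed before the revoke loop:
#   is_ipv4 "$currentip" || { echo "could not determine public IP" >&2; exit 1; }
```

With this check in place, a failed lookup stops the script before any existing rule is removed.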

With this setup, I can seamlessly SSH into my EC2 instances:

ssh -o "UserKnownHostsFile=/dev/null" -o "StrictHostKeyChecking=no" -o IdentitiesOnly=yes -i $AWS_SSH_ID ubuntu@13.233.236.48 -v

This allows me to run intensive tasks remotely, such as heavy PHP code execution and log analysis using tools like Wireshark.
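An instance that is stopped and started again receives a new public IP unless an Elastic IP is attached, so a hardcoded address can go stale. The sketch below shows one way to look the address up at connect time. It is an illustration, not part of my original setup: `ec2_ssh` is a hypothetical wrapper and `AWS_INSTANCE_ID` is an assumed extra variable that would need to be added to the credentials file.

```shell
# Hypothetical wrapper. AWS_INSTANCE_ID is an assumed extra variable
# holding the ID of the instance to connect to.
ec2_ssh() {
  # Starting an already running instance is harmless; then wait until it is up.
  aws ec2 start-instances --instance-ids "$AWS_INSTANCE_ID" >/dev/null || return 1
  aws ec2 wait instance-running --instance-ids "$AWS_INSTANCE_ID" || return 1
  # Ask EC2 for the address currently assigned to the instance.
  host=$(aws ec2 describe-instances --instance-ids "$AWS_INSTANCE_ID" \
    --query 'Reservations[0].Instances[0].PublicIpAddress' --output text) || return 1
  if [ -z "$host" ] || [ "$host" = "None" ]; then
    echo "instance has no public IP" >&2
    return 1
  fi
  # Same SSH options as the command above; extra arguments are passed through.
  ssh -o "UserKnownHostsFile=/dev/null" -o "StrictHostKeyChecking=no" \
    -o IdentitiesOnly=yes -i "$AWS_SSH_ID" "ubuntu@$host" "$@"
}
```

Calling `ec2_ssh` opens an interactive session, while `ec2_ssh uptime` runs a single remote command.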

EC2 Instance with Auto-Stop Functionality

To optimize costs and ensure my EC2 instance isn’t running unnecessarily, I’ve implemented an auto-stop script. This script, available on GitHub (https://github.com/jthoma/code-collection/tree/master/aws/ec2-inactivity-shutdown), runs every minute via cron and checks for user logout or network disconnects. If inactivity exceeds 30 seconds, it automatically shuts down the instance.
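The sketch below is not the script from the repository, only a simplified illustration of the idea under stated assumptions. It runs as root from cron, treats "no login sessions reported by `who`" as inactivity, and does not try to detect network disconnects. The names `IDLE_LIMIT`, `STAMP`, `active_sessions`, `idle_too_long`, and `check_idle` are all hypothetical.

```shell
IDLE_LIMIT=30                 # seconds of inactivity allowed, as described above
STAMP=/run/ec2-last-active    # hypothetical state file; /run is cleared on reboot

# Number of login sessions currently reported by who(1).
active_sessions() { who | wc -l; }

# Succeeds when more than IDLE_LIMIT seconds separate two epoch timestamps.
idle_too_long() { [ $(( $2 - $1 )) -gt "$IDLE_LIMIT" ]; }

# Meant to be run from root's crontab once a minute.
check_idle() {
  now=$(date +%s)
  if [ "$(active_sessions)" -gt 0 ] || [ ! -s "$STAMP" ]; then
    # Someone is logged in, or this is the first run: reset the clock.
    echo "$now" > "$STAMP"
    return 0
  fi
  if idle_too_long "$(cat "$STAMP")" "$now"; then
    shutdown -h now
  fi
}
```

Keeping the timestamp in a file lets each cron run compare against the last moment a session was seen, without a long-running process.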

Why This Setup Rocks

Portability: I can work from anywhere with an internet connection.

Efficiency: Termux provides a powerful command-line environment on a mobile device.

Cost-Effectiveness: The auto-stop script minimizes EC2 costs.

Flexibility: I can seamlessly switch between local and remote development.

Conclusion

My portable development setup demonstrates the incredible potential of combining mobile technology with cloud resources. With Termux and AWS, I’ve created a powerful and flexible environment that allows me to code and manage infrastructure from anywhere. This setup is perfect for developers who value portability and efficiency.