In the PHP world, we often encounter the age-old debate: globals vs. constants. This discussion pops up in various contexts, and one common battleground is how we store configuration values, especially sensitive ones like database connection strings. Should we use a global variable like $dsn or a defined constant like MySQL_DSN? Let’s dive into this, focusing on the specific example of a Data Source Name (DSN) for database connections.

The Contenders:

Global Variable ($dsn): A global variable, in this case, $dsn = "mysql://user:password@serverip/dbname", is declared in a scope accessible throughout your application.

Defined Constant (MySQL_DSN): A constant, defined using define('MySQL_DSN','mysql://user:password@serverip/dbname'), also provides application-wide access to the value.

The Pros and Cons:

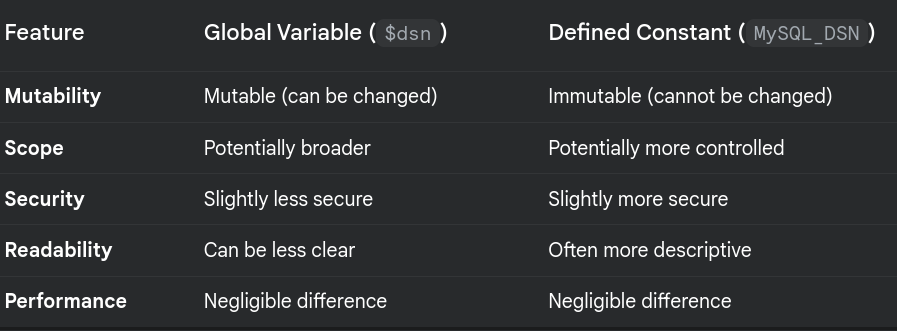

Analysis:

Mutability: Constants are immutable. Once defined, their value cannot be changed. This can be a significant advantage for security. Accidentally or maliciously modifying a database connection string mid-execution could have disastrous consequences. Globals, being mutable, are more vulnerable in this respect.
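To see the mutability difference in practice, here is a small demonstration. The attacker DSN and the tamperWithConfig() function are made up for illustration. The global is overwritten without complaint, while the attempt to redefine the constant fails and the original value survives.

```php
<?php
// Demonstration only: a global variable can be overwritten from anywhere,
// while a constant keeps the value it was first defined with.
$dsn = 'mysql://user:password@serverip/dbname';
define('MySQL_DSN', 'mysql://user:password@serverip/dbname');

// Hypothetical function standing in for buggy or malicious code.
function tamperWithConfig(): bool
{
    // Overwriting the global from inside a function silently succeeds.
    $GLOBALS['dsn'] = 'mysql://attacker:secret@evil-host/dbname';

    // Redefining the constant fails: define() returns false and PHP raises an
    // E_WARNING ("Constant MySQL_DSN already defined"), suppressed here with @.
    return @define('MySQL_DSN', 'mysql://attacker:secret@evil-host/dbname');
}

$redefined = tamperWithConfig();
echo $dsn, PHP_EOL;      // mysql://attacker:secret@evil-host/dbname
echo MySQL_DSN, PHP_EOL; // mysql://user:password@serverip/dbname
var_dump($redefined);    // bool(false)
```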

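Scope: Both are reachable from anywhere in the application, but not in the same way. Inside a function, a global variable must first be imported with the global keyword (or read through $GLOBALS), whereas a constant is visible everywhere without any import. Constants also tend to encourage a more controlled approach: they are explicitly defined and their purpose is usually clearer. Globals, especially if used liberally, can lead to code that’s harder to reason about and maintain.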
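A minimal sketch of PHP’s scoping rules, assuming PHP 7.1 or later for the nullable return type. The three function names are hypothetical and exist only to show how each kind of value is reached from inside a function:

```php
<?php
// Functions do not see global variables unless they import them,
// but constants are visible everywhere.
$dsn = 'mysql://user:password@serverip/dbname';
define('MySQL_DSN', 'mysql://user:password@serverip/dbname');

function dsnWithoutImport(): ?string
{
    return $dsn ?? null; // this $dsn is a fresh, unset local variable
}

function dsnWithImport(): string
{
    global $dsn; // alternatively, read $GLOBALS['dsn'] directly
    return $dsn;
}

function dsnFromConstant(): string
{
    return MySQL_DSN; // no import needed
}

var_dump(dsnWithoutImport()); // NULL
```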

Security: The immutability of constants provides a slight security edge. It reduces the risk of the connection string being altered unintentionally or maliciously. However, neither approach inherently protects against all vulnerabilities (e.g., if your code is compromised). Proper input sanitization and secure coding practices are always essential.

Readability: Constants, by convention (using uppercase and descriptive names), tend to be more readable. MySQL_DSN clearly signals its purpose, whereas $dsn might require looking at its initialization to understand its role.

Performance: The performance difference between accessing a global variable and a defined constant is negligible in modern PHP. Don’t let performance be the deciding factor here.

Abstracting the MySQL Client Library:

Let’s discuss abstracting the MySQL client library. This is a fantastic idea, regardless of whether you choose globals or constants. Using an abstraction layer (often a class) allows you to easily switch between different database libraries (e.g., MySQLi, PDO) or even different connection methods without rewriting large portions of your application.

Here’s a basic example (using PDO, but the concept applies to other libraries):

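```php
class Database {
    private static $pdo;

    public static function getConnection() {
        if (!isset(self::$pdo)) {
            $dsn = defined('MySQL_DSN') ? MySQL_DSN : $GLOBALS['dsn']; // Check for constant first

            // PDO does not understand URL-style strings such as
            // mysql://user:password@serverip/dbname, so split the URL into a
            // PDO DSN ("mysql:host=...;dbname=...") plus user name and password.
            $parts = parse_url($dsn);
            $pdoDsn = sprintf('%s:host=%s;dbname=%s', $parts['scheme'], $parts['host'], ltrim($parts['path'], '/'));

            try {
                self::$pdo = new PDO($pdoDsn, $parts['user'] ?? null, $parts['pass'] ?? null);
                self::$pdo->setAttribute(PDO::ATTR_ERRMODE, PDO::ERRMODE_EXCEPTION); // Good practice!
            } catch (PDOException $e) {
                die("Database connection failed: " . $e->getMessage());
            }
        }

        return self::$pdo;
    }
}

// Usage:
$db = Database::getConnection();
$stmt = $db->query("SELECT * FROM users");
// ... process results ...
```

Recommendation: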

Defined constants are generally the preferred approach for database connection strings. Their immutability and improved readability make them slightly more secure and maintainable. Combine this with a well-designed database abstraction layer, and you’ll have a robust and flexible system.

Further Considerations:

Environment Variables: Consider storing sensitive information like database credentials in environment variables and retrieving them in your PHP code for production environments. This is a more secure way to manage configuration.

Configuration Files: For more complex configurations, using configuration files (e.g., INI, YAML, JSON) can be a better approach.
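As a sketch of the configuration-file route, assume an INI file with one section per database. The section and key names here are illustrative, not a standard. parse_ini_string() (or parse_ini_file() for a real file) reads the values, and define() then locks them into constants for the rest of the request:

```php
<?php
// Illustrative contents of a hypothetical config.ini; in a real project you would
// call parse_ini_file('/path/outside/webroot/config.ini', true, INI_SCANNER_TYPED).
$ini = <<<'INI'
[mysql]
enabled = 1
dsn = "mysql://user:password@serverip/dbname"

[pgsql]
enabled = 0
dsn = "pgsql://user:password@serverip/dbname"
INI;

// true keeps the [sections]; INI_SCANNER_TYPED turns numeric strings into integers
// and true/false/on/off into booleans instead of leaving everything as strings.
$config = parse_ini_string($ini, true, INI_SCANNER_TYPED);
if ($config === false) {
    throw new RuntimeException('Invalid configuration file');
}

// Promote the values to constants so they cannot change during the request.
define('MYSQL_ENABLED', (bool) $config['mysql']['enabled']);
define('MYSQL_DSN', $config['mysql']['dsn']);
define('PGSQL_ENABLED', (bool) $config['pgsql']['enabled']);
define('PGSQL_DSN', $config['pgsql']['dsn']);
```

Keep such a file outside the web root so it can never be served as plain text.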

Using separate boolean constants like MYSQL_ENABLED and PGSQL_ENABLED to control which database connection is active is a very good practice. It adds another layer of control and clarity, and, as discussed above, the immutability of constants is a crucial advantage for configuration values.

Here’s how you could integrate that into the previous example, along with some improvements:

```php
<?php

// Configuration (best practice: store these in environment variables or a separate config file)
define('MYSQL_ENABLED', getenv('MYSQL_ENABLED') ?: 0); // Use getenv() for environment variables, fall back to 0
define('MYSQL_DSN', getenv('MYSQL_DSN') ?: 'user:password@server/database'); // Fallback value for development
define('PGSQL_ENABLED', getenv('PGSQL_ENABLED') ?: 0);
define('PGSQL_DSN', getenv('PGSQL_DSN') ?: 'user:password@server/database');

class Database {
    private static $pdo;
    private static $activeConnection; // Track which connection is active

    public static function getConnection() {
        if (!isset(self::$pdo)) {
            if (MYSQL_ENABLED) {
                $dsn = MYSQL_DSN;
                $driver = 'mysql'; // Store the driver for later use
                self::$activeConnection = 'mysql';
            } elseif (PGSQL_ENABLED) {
                $dsn = PGSQL_DSN;
                $driver = 'pgsql';
                self::$activeConnection = 'pgsql';
            } else {
                die("No database connection enabled."); // Handle the case where no connection is configured.
            }

            // The configured value has the form user:password@host/dbname, which PDO
            // cannot use directly. Prefixing "//" lets parse_url() split it, and the
            // PDO DSN is rebuilt as "<driver>:host=...;dbname=...".
            $parts = parse_url('//' . $dsn);
            $pdoDsn = sprintf('%s:host=%s;dbname=%s', $driver, $parts['host'], ltrim($parts['path'], '/'));

            try {
                self::$pdo = new PDO($pdoDsn, $parts['user'] ?? null, $parts['pass'] ?? null);
                self::$pdo->setAttribute(PDO::ATTR_ERRMODE, PDO::ERRMODE_EXCEPTION);
                // More PDO settings if needed (e.g., charset)
            } catch (PDOException $e) {
                die("Database connection failed: " . $e->getMessage());
            }
        }

        return self::$pdo;
    }

    public static function getActiveConnection() { // Added a method to get the active connection type
        return self::$activeConnection;
    }
}

// Example usage:
$db = Database::getConnection();

if (Database::getActiveConnection() === 'mysql') {
    // MySQL specific operations
    $stmt = $db->query("SELECT * FROM users");
} elseif (Database::getActiveConnection() === 'pgsql') {
    // PostgreSQL specific operations
    $stmt = $db->query("SELECT * FROM users"); // Example: Adapt query if needed.
}

// ... process results ...
```

The snippet above brings a few key improvements:

Environment Variables: Reading sensitive configuration from environment variables with getenv() keeps it out of the source code, which is the recommended approach. The fallback values are useful for development but should never be used in production.

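Driver in DSN: PDO requires every DSN to start with the driver name (mysql:, pgsql:, etc.), followed by driver-specific settings such as host and dbname; for MySQL and PostgreSQL the user name and password are normally passed as separate arguments to the PDO constructor. Building the prefix from $driver keeps the choice of database explicit.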
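To make the DSN format concrete, here is a minimal sketch that assumes the article’s user:password@host/dbname convention for configured values. buildPdoArgs() is a hypothetical helper name. It returns the three arguments the PDO constructor takes:

```php
<?php
// Sketch: turn "user:password@host/dbname" into [PDO DSN, username, password].
function buildPdoArgs(string $driver, string $dsn): array
{
    // A leading "//" makes parse_url() read user:password@host as the authority part.
    $parts = parse_url('//' . $dsn);
    if ($parts === false || !isset($parts['host'], $parts['path'])) {
        // Don't include $dsn in the message: it contains the password.
        throw new InvalidArgumentException("Malformed $driver connection string");
    }

    $pdoDsn = sprintf('%s:host=%s;dbname=%s', $driver, $parts['host'], ltrim($parts['path'], '/'));
    return [$pdoDsn, $parts['user'] ?? null, $parts['pass'] ?? null];
}

[$pdoDsn, $user, $pass] = buildPdoArgs('pgsql', 'user:password@server/database');
echo $pdoDsn, PHP_EOL; // pgsql:host=server;dbname=database
// new PDO($pdoDsn, $user, $pass) would then open the connection.
```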

Active Connection Tracking: The $activeConnection property and getActiveConnection() method allow you to easily determine which database type is currently being used, which can be helpful for conditional logic.

Error Handling: The die() statement now provides a more informative message if no database connection is enabled. You could replace this with more sophisticated error handling (e.g., logging, exceptions) in a production environment.
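One way to move beyond die() is sketched below. DatabaseConfigException and selectDriver() are hypothetical names, not part of PDO. The idea is to throw an exception so that a top-level handler can log the details and show the visitor a generic error:

```php
<?php
// Sketch: signal configuration problems with an exception instead of die().
final class DatabaseConfigException extends RuntimeException {}

function selectDriver(bool $mysqlEnabled, bool $pgsqlEnabled): string
{
    if ($mysqlEnabled) {
        return 'mysql';
    }
    if ($pgsqlEnabled) {
        return 'pgsql';
    }
    throw new DatabaseConfigException('No database connection enabled.');
}

try {
    $driver = selectDriver(false, false);
} catch (DatabaseConfigException $e) {
    // Details go to the server log (stderr under the CLI), not to the visitor.
    error_log('Database configuration error: ' . $e->getMessage());
    $driver = null;
}
```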

Clearer Configuration: The boolean constants make it very clear which database connections are enabled.
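One caveat with flags read through getenv(): it returns a string, or false when the variable is unset. As a result, a line such as PGSQL_ENABLED=false would still count as enabled in a plain if. A possible fix is to normalise the value with FILTER_VALIDATE_BOOLEAN before defining the constant. The sketch below uses a hypothetical envFlag() helper, and its putenv() calls only simulate the environment for the demonstration:

```php
<?php
// envFlag() maps "1/true/on/yes" to true and "0/false/off/no/''" to false;
// unset or unrecognised values fall back to $default.
function envFlag(string $name, bool $default = false): bool
{
    $raw = getenv($name);
    if ($raw === false) {
        return $default; // variable not set at all
    }
    // FILTER_NULL_ON_FAILURE returns null (not false) for unrecognised strings.
    return filter_var($raw, FILTER_VALIDATE_BOOLEAN, FILTER_NULL_ON_FAILURE) ?? $default;
}

// Simulated environment, for demonstration only.
putenv('MYSQL_ENABLED=1');
putenv('PGSQL_ENABLED=false');

define('MYSQL_ENABLED', envFlag('MYSQL_ENABLED'));
define('PGSQL_ENABLED', envFlag('PGSQL_ENABLED'));
var_dump(MYSQL_ENABLED, PGSQL_ENABLED); // prints bool(true), then bool(false)
```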

Using a .env file (or similar mechanism) combined with environment variable sourcing is a fantastic way to manage different environments (development, testing, staging, production) on a single machine or AWS EC2 instance. It drastically reduces the risk of accidental configuration errors and simplifies the deployment process.

Here’s a breakdown of why this approach is so effective:

Benefits of .env files and Environment Variable Sourcing:

Separation of Concerns: Configuration values are separated from your application code. This makes your code more portable and easier to maintain. You can change configurations without modifying the code itself.

Environment-Specific Settings: Each environment (dev, test, prod) can have its own .env file with specific settings. This allows you to easily switch between environments without manually changing configuration values in your code.

Security: Sensitive information (API keys, database passwords, etc.) is not stored directly in your codebase. This is a significant security improvement.

Simplified Deployment: When deploying to a new environment, you just need to copy the appropriate .env file to the server and source it. No need to modify your application code.

Reduced Administrative Errors: By automating the process of setting environment variables, you minimize the risk of human error. No more manually editing configuration files on the server.

Version Control: You can exclude the .env file from version control (using .gitignore) to prevent sensitive information from being accidentally committed to your repository. However, it’s a good practice to include a .env.example file with placeholder values for developers to use as a template.

How it Works:

.env File: You create a .env file in the root directory of your project. This file contains key-value pairs representing your configuration settings:

```
MYSQL_ENABLED=1
MYSQL_DSN=user:password@www.jijutm.com/database_name
API_KEY=your_secret_api_key
DEBUG_MODE=true
```

Sourcing the .env file: You need a way to load the variables from the .env file into the environment that your PHP process sees. There are several ways to do this:

- source .env (Bash): In a development or testing environment, you can load the file in your terminal before running your PHP scripts. On its own, source .env only creates shell variables, which child processes such as php do not inherit unless each line is prefixed with export. Running set -a; source .env; set +a exports everything the file defines into the current shell’s environment.
- dotenv Library (PHP): For production environments, using a library like vlucas/phpdotenv is recommended. This library allows you to load the .env file programmatically in your PHP code:

```php
<?php
require_once __DIR__ . '/vendor/autoload.php'; // Assuming you're using Composer

// Immutable: values already present in the real environment are not overwritten.
// In phpdotenv v5, createImmutable() fills only $_ENV and $_SERVER; the "Unsafe"
// variant also calls putenv(), which getenv() needs (putenv() is not thread-safe).
$dotenv = Dotenv\Dotenv::createUnsafeImmutable(__DIR__);
$dotenv->load();

// Now you can access environment variables using getenv():
$mysqlEnabled = getenv('MYSQL_ENABLED');
$mysqlDsn = getenv('MYSQL_DSN');
// ...
```

- Web Server Configuration: Some web servers (like Apache or Nginx) allow you to set environment variables directly in their configuration files. This is also a good option for production.

Accessing Environment Variables: In your PHP code, you can use the getenv() function to retrieve the values of the environment variables:

```php
$mysqlEnabled = getenv('MYSQL_ENABLED');

if ($mysqlEnabled) {
    // ... connect to MySQL ...
}
```

Example Workflow:

- Development: A developer creates a .env file with their local settings and runs source .env before running the application.
- Testing: A .env.testing file is created with the testing environment’s settings. The testing script sources this file before running tests.
- Production: The production server has a .env file with the production settings. The web server or a deployment script sources this file when the application is deployed.
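To tie the loading step and the workflow together, here is a minimal sketch of what a loader does. It rests on explicit assumptions: loadEnvFile() and bootstrapEnv() are hypothetical helpers, APP_ENV is an invented variable that selects files such as .env.testing, and only plain KEY=VALUE lines are supported. In practice, vlucas/phpdotenv handles quoting, escapes and validation far more thoroughly.

```php
<?php
// Minimal sketch of a .env loader; NOT a replacement for vlucas/phpdotenv.
// Handles plain KEY=VALUE lines and # comments only (no quotes, escapes,
// "export" prefixes or variable interpolation).
function loadEnvFile(string $path): void
{
    $lines = file($path, FILE_IGNORE_NEW_LINES | FILE_SKIP_EMPTY_LINES);
    if ($lines === false) {
        throw new RuntimeException("Cannot read $path");
    }
    foreach ($lines as $line) {
        $line = trim($line);
        if ($line === '' || $line[0] === '#' || strpos($line, '=') === false) {
            continue; // skip blank lines, comments and lines without "="
        }
        [$key, $value] = array_map('trim', explode('=', $line, 2));
        // Immutable behaviour: keep any value the real environment already provides.
        if (getenv($key) === false) {
            putenv("$key=$value");
        }
    }
}

// Hypothetical convention: APP_ENV=testing selects .env.testing; otherwise use .env.
function bootstrapEnv(string $dir): string
{
    $appEnv = getenv('APP_ENV');
    $path = "$dir/.env";
    if ($appEnv !== false && $appEnv !== '' && is_file("$dir/.env.$appEnv")) {
        $path = "$dir/.env.$appEnv";
    }
    loadEnvFile($path);
    return $path; // which file was loaded, handy for logging
}
```

A front controller could call bootstrapEnv(__DIR__) before anything reads getenv('MYSQL_DSN'). Like phpdotenv’s immutable mode, the sketch never overwrites a variable that the web server or shell has already set, so server-level configuration wins over the file.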

By following this approach, you can create a smooth and efficient workflow for managing your application’s configuration across different environments. It’s a best practice that significantly improves the maintainability and security of your PHP applications.