Requirements (My hallucinations):

Design and architect a highly available system with a large user base for the National Highways. Regular employees upload photos of work in progress (WIP) at different stages and again when the work is completed; once a timeline video has been created, all images are archived. The WIP sequence should keep the latest photo thumbnail linked to a project blog page, with a gallery linking to the last photo of each day. Post-processing of completed work may take up to a week, since the lowest possible cost matters more than speed. The system should be capable of handling hundreds of thousands of high-quality mobile photographs per day. Runtime costs should be as low as possible. For each WIP, at least one photograph every six hours is desired.

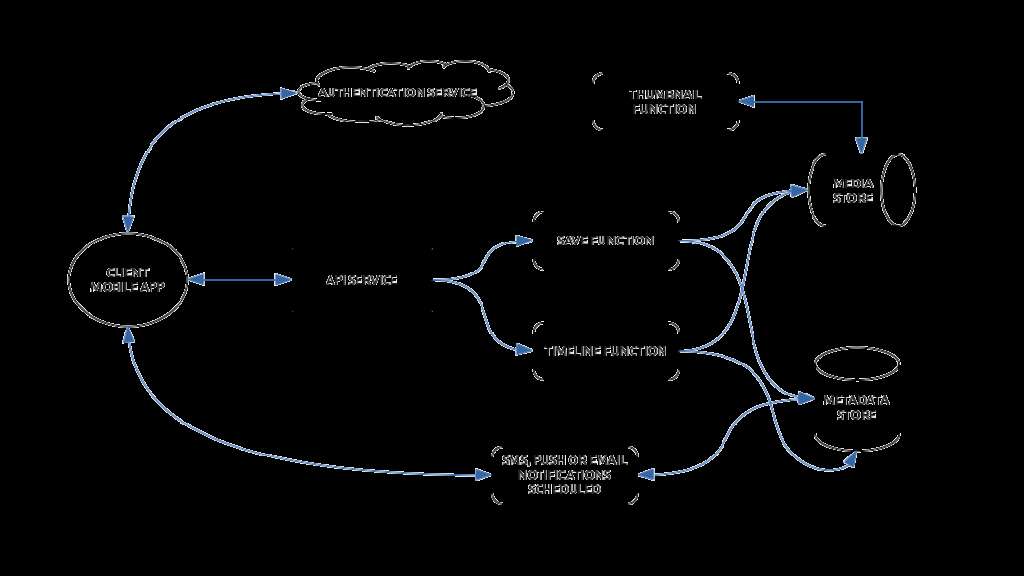

Solution on AWS (My views):

The application should be developed as a single-page app with progressive-web-app support, using JavaScript and CSS libraries. It can be hosted in an S3 bucket, with the CloudFront default origin pointing to it. The standard secure approach is recommended: HTTPS (with HTTP redirected to HTTPS), an origin access identity (OAI), and a custom domain with a certificate from ACM. The dynamic part uses a Cognito User Pool, Amazon API Gateway (regional), Lambda, STS, etc. The API Gateway stage should be the origin for the CloudFront behaviour that matches the API route.

When the user logs in, a token from Cognito is cached. Whenever a time-series photo is taken, the user is asked to choose the project. The list of projects should be filtered down using geo-fencing, matching the device location against the Agency's geospatial database.
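As a rough illustration of the geo-fencing filter, the sketch below keeps only the projects within a radius of the device location, using the haversine great-circle distance. The project record layout (`project_id`, `lat`, `lon`) and the 2 km default radius are my assumptions; a real geospatial database would more likely match against road segments or polygons.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in kilometres between two lat/lon points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def nearby_projects(user_lat, user_lon, projects, radius_km=2.0):
    """Return project ids within radius_km of the user, nearest first.

    `projects` is a list of dicts with hypothetical keys
    'project_id', 'lat' and 'lon'.
    """
    hits = []
    for p in projects:
        distance = haversine_km(user_lat, user_lon, p["lat"], p["lon"])
        if distance <= radius_km:
            hits.append((distance, p["project_id"]))
    return [project_id for _, project_id in sorted(hits)]
```

The app would then show only the returned projects in the picker, which keeps the choice short for someone standing on site.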

Force the mobile app to take only landscape photos, since portrait pictures will look bad when stitched into a video. Photographs should be stamped with geo-coordinates and with custom headers carrying the project reference, the department employee, and other device-specific details. Prompt the user to take a photo every 6 hours. The mobile application calls API Gateway and Lambda, which uses the STS AssumeRole API to obtain temporary credentials and reads the S3 bucket name from configuration. The role should have write-only permission (write, write multipart, list parts) on objects in that specific bucket. The app then uses the temporary credentials for a multipart upload to S3. The key prefix should be randomized with 4 characters.
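To make the write-only credentials and the randomized prefix a little more concrete, here is a minimal sketch. It builds a session policy that the Lambda could pass as the `Policy` parameter of AssumeRole, and an object key with a random 4-character prefix. The key layout `prefix/project/timestamp.jpg`, the function names, and the bucket and project names are my assumptions, not something fixed by the design.

```python
import json
import secrets
from datetime import datetime, timezone


def build_upload_policy(bucket, project_id):
    """Session policy (JSON string) that allows only uploads for one project."""
    policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": [
                    "s3:PutObject",                 # also covers multipart part uploads
                    "s3:AbortMultipartUpload",
                    "s3:ListMultipartUploadParts",
                ],
                # Any random prefix, but only under this project (assumed layout).
                "Resource": f"arn:aws:s3:::{bucket}/*/{project_id}/*",
            }
        ],
    }
    return json.dumps(policy)


def build_object_key(project_id, taken_at, prefix=None):
    """Key with a random 4-character hex prefix; taken_at must be timezone-aware."""
    prefix = prefix or secrets.token_hex(2)  # 2 random bytes -> 4 hex characters
    stamp = taken_at.astimezone(timezone.utc).strftime("%Y%m%dT%H%M%SZ")
    return f"{prefix}/{project_id}/{stamp}.jpg"
```

Because the policy grants no read or list-bucket action, a leaked credential could at worst add photos to the one project until it expires.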

S3 object-created events can trigger a Lambda function which, depending on the file size, creates the thumbnails itself or hands off to an EC2 Spot Instance. Three sizes are recommended: one for the final video, one for gallery images, and one for the latest image. Once the thumbnails are created, store them in the corresponding S3 bucket. The raw high-quality images and the video go to the Glacier tier, and the smallest thumbnail overwrites project/latest.jpg. Glacier keys are inserted into a DynamoDB table through an SQS buffer, with the project as the partition key and the image timestamp as the sort key.
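The index layout can be sketched in plain Python. The attribute names (`project_id`, `taken_at`, `archive_key`) are my assumptions; the point is only that a sortable timestamp as the sort key makes "last photo per day" for the gallery a simple pass over one project's items.

```python
from datetime import datetime, timezone


def index_item(project_id, taken_at, archive_key):
    """One index record: project as partition key, UTC timestamp as sort key."""
    return {
        "project_id": project_id,
        # Fixed-width UTC format so string order equals time order.
        "taken_at": taken_at.astimezone(timezone.utc).strftime("%Y-%m-%dT%H:%M:%SZ"),
        "archive_key": archive_key,
    }


def last_photo_per_day(items):
    """Pick the latest item of each calendar day (UTC), oldest day first."""
    latest = {}
    for item in sorted(items, key=lambda i: i["taken_at"]):
        day = item["taken_at"][:10]   # 'YYYY-MM-DD'
        latest[day] = item            # later items overwrite earlier ones
    return [latest[day] for day in sorted(latest)]
```

For example, three photos taken at 06:00 and 18:00 on one day and 09:00 on the next would yield two gallery entries: the 18:00 photo and the 09:00 photo.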

Create another Lambda that is invoked once every 6 hours by the CloudWatch Events scheduler. It identifies projects that missed the desired once-in-six-hours frequency. It should differentiate between projects that work round the clock and those that work only part of the day. If required, fire an escalation notification through SNS.
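The check inside that Lambda might look roughly like the sketch below. The `Project` fields and the idea of describing a partial-day project by shift start and end hours are my assumptions. For partial-day projects the gap is measured from the start of the current shift, so that nobody is escalated for the night before.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class Project:
    project_id: str
    last_photo_at: datetime          # timezone-aware
    round_the_clock: bool = True
    shift_start_hour: int = 0        # only used for partial-day projects
    shift_end_hour: int = 24


def overdue_projects(projects, now, max_gap=timedelta(hours=6)):
    """Return ids of projects whose last photo is older than max_gap."""
    overdue = []
    for p in projects:
        reference = p.last_photo_at
        if not p.round_the_clock:
            # Outside working hours nothing is expected, so skip.
            if not (p.shift_start_hour <= now.hour < p.shift_end_hour):
                continue
            shift_start = now.replace(
                hour=p.shift_start_hour, minute=0, second=0, microsecond=0
            )
            # Do not count time before today's shift began.
            reference = max(reference, shift_start)
        if now - reference > max_gap:
            overdue.append(p.project_id)
    return overdue
```

The returned ids would be what gets published to SNS when escalation is required.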

Create and expose an API to move a project from WIP to completed. It triggers a Step Functions state machine, which first calls a Lambda to identify the files to retrieve from Glacier, passes the list to a second function that issues a batch retrieval from Glacier and, when retrieval has completed, uses AWS Elemental MediaConvert to create the timeline video. Export the file index as JSON to S3 Glacier and delete it from DynamoDB. Update all gallery images with a specific tag and creation timestamp so that a lifecycle rule deletes them from S3 Standard after a month. Finally, update the latest thumbnail with a long expiry in the response header metadata. The API should be idempotent: running it twice on the same project should not cause failures.
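One way to keep the completion API safe to call twice is to guard the workflow start with a one-way status transition. The sketch below uses an in-memory store purely as a stand-in; in the real system I would expect the same check to be a conditional write on the project record (DynamoDB supports condition expressions on updates). All class and function names here are hypothetical.

```python
class ProjectStatusStore:
    """In-memory stand-in for a table that supports conditional writes."""

    def __init__(self):
        self._status = {}

    def register(self, project_id):
        """Create the project in WIP state if it does not exist yet."""
        self._status.setdefault(project_id, "WIP")

    def mark_completing(self, project_id):
        """Move WIP -> COMPLETING; return False if that is not possible."""
        if self._status.get(project_id) != "WIP":
            return False
        self._status[project_id] = "COMPLETING"
        return True


def complete_project(store, project_id, start_workflow):
    """Start the completion workflow at most once per project."""
    if not store.mark_completing(project_id):
        return "skipped"          # already triggered, or unknown project
    start_workflow(project_id)    # e.g. start the state machine execution
    return "started"
```

A second call for the same project returns "skipped" without starting another workflow, so a retry or a double click does not produce a failed second run.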

There is another possibility for the status change: use an EC2 Spot Instance, which could work out to be much cheaper but can be considerably more complicated. First, calculate the disk space requirement from the image sizes and the estimated final video size. From this estimate, the instance size should be determined dynamically. The Spot Instance should provision itself on startup by installing FFmpeg through cloud-init, so that we can keep using the latest AMI in the distribution. Spot price quoting should also be automated through a Lambda. The best performance can be obtained with an instance that has an ephemeral store and a gigabit network. This could be further improved with S3 VPC endpoints, which save on data transfer charges and improve security.
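The sizing step is simple arithmetic, sketched below. The video-to-images ratio (10%), the 30% headroom, and the instance catalogue with its names and disk sizes are all placeholders I made up for illustration; real values would come from measurements and from the instance types actually on offer.

```python
import math

GIB = 2 ** 30


def estimate_disk_gib(image_sizes_bytes, video_ratio=0.1, headroom=1.3):
    """Disk needed for all images plus the estimated video, with headroom."""
    images = sum(image_sizes_bytes)
    video = images * video_ratio          # assumed size of the final video
    return math.ceil((images + video) * headroom / GIB)


def pick_instance(required_gib, catalogue):
    """Smallest catalogue entry whose ephemeral disk fits the requirement.

    `catalogue` maps a (hypothetical) instance name to its disk size in GiB.
    """
    fitting = [(disk_gib, name) for name, disk_gib in catalogue.items()
               if disk_gib >= required_gib]
    if not fitting:
        raise ValueError(f"no instance offers {required_gib} GiB of disk")
    return min(fitting)[1]
```

For 1000 photos of 8 MiB each this gives about 7.8 GiB of images, 0.8 GiB of video, and 12 GiB after headroom and rounding up, so the smallest catalogue entry with at least 12 GiB would be chosen.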

Use AWS SAM or CDK to manage and deploy the infrastructure, and keep it versioned in Git or SVN.

For a broad view, the high-level architecture is added as it was requested by many viewers.

Architecture Outline:

Further enhancements:

This could be converted into a multi-tenant SaaS project that offers these facilities to any builder who wants transparency in their commitments, not just the National Highways.

Plugins for popular CMSs such as WordPress and Joomla could also be created for integration into customer portals.