In this tutorial, I’ll walk you through creating a timelapse-effect video that transitions from dark to bright, all from a single high-resolution photo. I captured the original image on a Samsung Galaxy M14 5G, then processed it with Linux command-line tools: ImageMagick, PHP, and ffmpeg. This approach suits academic purposes or anyone interested in experimenting with video creation from static images. Note that this is only an academic exploration; to use it as a professional tool, the brightness values and frame counts should be chosen with the utmost care. Here’s how you can achieve the effect.

The first task was to find a suitable image and crop it to 9:16, since I was targeting Facebook Reels. The 50 MP images taken on the Samsung Galaxy M14 5G are 4:3 at 8160×6120, while Facebook Reels and YouTube Shorts use 9:16 at 1080×1920 or proportionate dimensions. My final source image was 1700×3022, added here for reference; I had to scale it down to fit the blog layout.
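To make the crop arithmetic concrete, here is a minimal shell sketch that derives the 9:16 height for a given width. The function name `height_for_width` and the file name `photo.jpg` are hypothetical, and the commented ImageMagick crop command is only one assumed way to produce such a crop; the original crop may well have been done differently.

```shell
#!/bin/bash
# Hypothetical helper: height of a 9:16 portrait frame for a given width,
# rounded to the nearest pixel using integer arithmetic.
height_for_width() {
    local w=$1
    echo $(( (w * 16 + 4) / 9 ))
}

height_for_width 1080   # prints 1920
height_for_width 1700   # prints 3022

# Assumed crop command (not from the original workflow): a centred
# 1700x3022 crop taken from a full-size photo named photo.jpg.
# convert photo.jpg -gravity center -crop 1700x3022+0+0 +repage src.jpg
```

The two calls reproduce the Reels target size and the 1700×3022 source image used in this post.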

Step 1: Preparing the Frame Rate and Length

To begin, I decided on a 20-second video at 25 frames per second, for a total of 500 frames. Creating 500 frames by hand would be tedious, and any professional would use some kind of automation. As a DevOps enthusiast and a Linux fanatic since 1998, my natural choice was shell scripting. But my addiction to PHP, which I have used since 2002, kicked in, and the PHP snippet in Step 2 was the outcome.
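Before moving on to the PHP script, the numbers above can be sanity-checked with a few lines of shell arithmetic. This is only a sketch: the variable names are chosen for illustration, and the pad width assumes the same digits-plus-one convention that the PHP script in Step 2 uses.

```shell
#!/bin/bash
dur=20      # video length in seconds
fps=25      # frames per second

frames=$(( dur * fps ))       # total number of frames
padlen=$(( ${#frames} + 1 ))  # digits in the frame count, plus one

echo "frames=$frames padlen=$padlen"

# First and last frame names at that width
printf "img_%0${padlen}d.jpg\n" 0 $(( frames - 1 ))
```

With the values used here, this reports 500 frames and a pad width of 4, so the frames run from img_0000.jpg to img_0499.jpg.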

Step 2: Generating Brightness and Contrast Values Using PHP

The next step was to create a list of brightness-contrast values that gives the impression of a gradually brightening scene. Using PHP, I mapped each frame number to a value that rises linearly from -50 toward +10 in equal steps. Here’s the PHP snippet I used:

<?php

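$dur = 20; // video length in seconds

$fps = 25; // frames per second

$frames = $dur * $fps; // total frames to generate (500)

$plen = strlen(''.$frames) + 1; // zero-pad width: digits in the frame count plus one (4)

$val = -50; // starting brightness-contrast value

$incr = (60 / $frames); // per-frame step, so the value rises by 60 over the whole video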

for($i = 0; $i < $frames; $i++){

$pfx = str_pad($i, $plen, '0', STR_PAD_LEFT);

echo $pfx, " ",round($val,2),"\n";

$val += $incr;

}

?>

On Ubuntu, I saved the above code as gen.php, updated the values for duration and frame rate, then ran it from the CLI and redirected the output to a text file, values.txt, with the following command.

php -q gen.php > values.txt

To make things easy, I copied the source file as src.jpg into a temporary folder and created a sub-folder ‘anim’ to hold the frames. I already had a script that resumes from where it left off if it gets interrupted. The script is as follows.

#!/bin/bash

gdone=$(find ./anim/ -type f | grep -c '\.jpg$')

tcount=$(grep -c '^[0-9]' values.txt)

todo=$(( tcount - gdone ))

echo "done $gdone of ${tcount}, to do $todo more "

tail -n "$todo" values.txt | while read -r fnp val

do

echo "$fnp"

convert src.jpg -brightness-contrast "${val}" "anim/img_${fnp}.jpg"

done

The process is quite simple. The first line of code defines a variable gdone by counting the ‘.jpg’ files in the ‘anim’ sub-directory. The total count is then taken from values.txt, and the difference is the number of frames still to do. The status is echoed to the output, and a loop reads the last todo lines from values.txt and runs the conversion with ImageMagick’s convert utility. If this needs to be interrupted, I just close the terminal window, as a subsequent execution will continue from where it left off. Because the resume point is inferred from the file count, a frame that was only partly written at the moment of interruption still counts as done, so it is worth checking the newest file before resuming. Once this is completed, the frames are stitched together with ffmpeg using the following command.

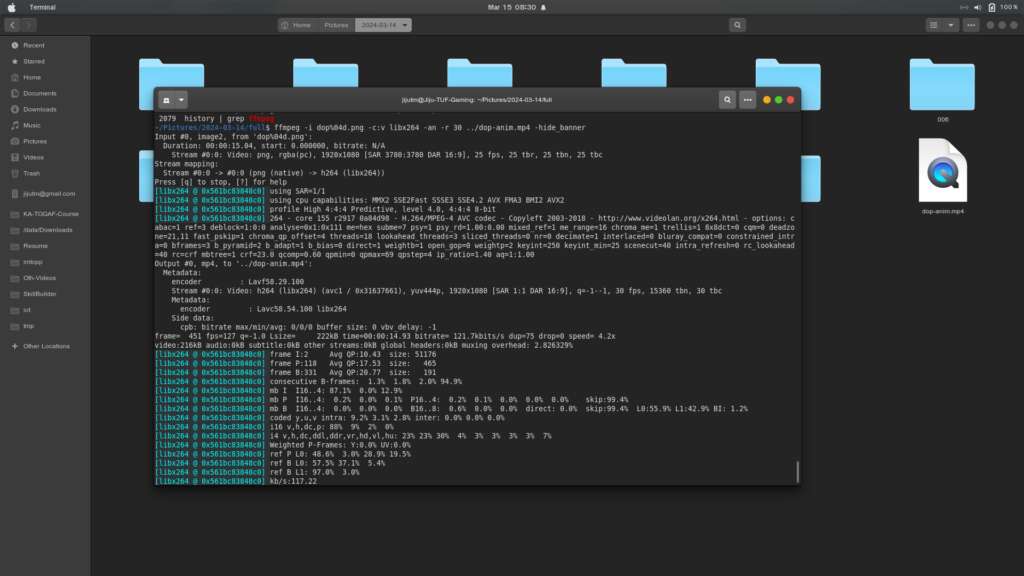

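ffmpeg -i anim/img_%04d.jpg -an -y ../output.mp4

The filename pattern %04d follows from the padding width, which is the number of digits in the frame count plus 1: in the PHP code, the variable $plen on code line 4 is passed to str_pad as the pad length. Since no input frame rate is given, ffmpeg reads the image sequence at its default of 25 fps, which happens to match the rate chosen in Step 1.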
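If the frame rate or padding width ever changes, it may be safer to pass both to ffmpeg explicitly. The sketch below only builds and prints the command so it can be inspected first; the function name `stitch_cmd` is hypothetical, and the `-framerate`, `-c:v libx264`, and `-pix_fmt yuv420p` options are assumptions layered on top of the original command, not part of it.

```shell
#!/bin/bash
# Hypothetical helper: print an ffmpeg command for a given frame rate
# and zero-pad width, without running it.
stitch_cmd() {
    local fps=$1 padlen=$2
    # -framerate sets the input rate of the image sequence;
    # yuv420p is a widely supported pixel format for H.264 players.
    echo "ffmpeg -framerate ${fps} -i anim/img_%0${padlen}d.jpg" \
         "-an -c:v libx264 -pix_fmt yuv420p -y ../output.mp4"
}

stitch_cmd 25 4
```

Once the printed command looks right, it can be copied into the terminal and run from the folder that contains ‘anim’.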

The properties of the final output generated by ffmpeg are as follows. Note that the dimensions, duration, and frame rate match what was decided at the start.