Introduction

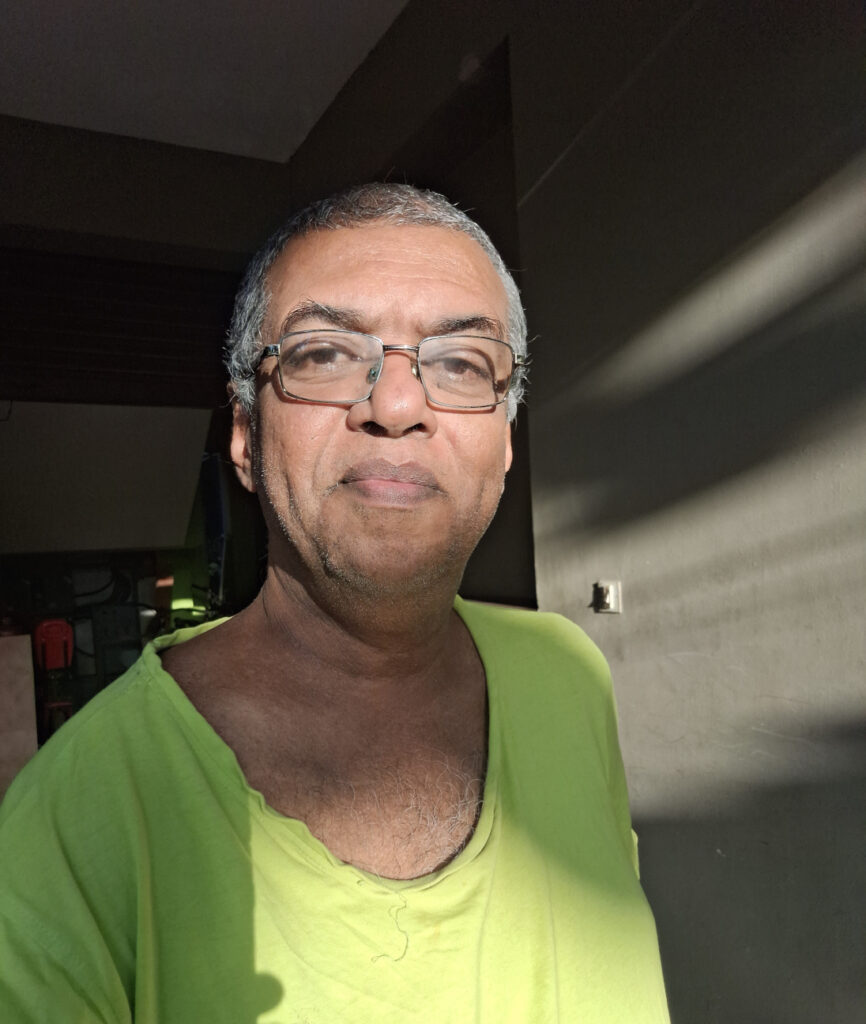

In this blog post, I’ll walk you through a personal project that combines creative image editing with scripting to produce an animated video. The goal was to take one image from each year of my life, crop and resize them, then animate them in a 3×3 grid. The result is a visually engaging reel targeted for Facebook, where the images gradually transition and resize into place, accompanied by a custom audio track.

This project uses a variety of tools, including GIMP, PHP, LibreOffice Calc, ImageMagick, Hydrogen Drum Machine, and FFmpeg. Let’s dive into the steps and see how all these tools come together.

Preparing the Images with GIMP

The first step was to select one image from each year that clearly showed my face. Using GIMP, I cropped each image to focus solely on the face and resized them all to a uniform size of 1126×1126 pixels.

I also added the year in the bottom-left corner and the Google Plus Code (location identifier) in the bottom-right corner of each image. To give the images a scrapbook-like feel, I applied a torn paper effect around the edges. The torn-paper strips were generated with Google Gemini using the prompt “create an image of 3 irregular vertical white thin strips on a light blue background to be used as torn paper edges in colash”.

Key actions in GIMP:

- Crop and resize each image to the same dimensions.

- Add text for the year and location.

- Apply a torn paper frame effect for a creative touch.

Organizing the Data in LibreOffice Calc

Before proceeding with the animation, I needed to plan out the timing and positioning of each image. I used LibreOffice Calc to calculate:

- Frame duration for each image (in relation to the total video duration).

- The positions of each image in the final 3×3 grid.

- Resizing and movement details for each image to transition smoothly from the bottom to its final position.
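As a rough illustration of the kind of calculation involved, the per-frame shrink step can be obtained by dividing the total size change by the number of frames and rounding up. The helper name `stepPerFrame` is made up for this sketch. The sizes and frame counts are taken from the arrays in the full script at the end of this post, and rounding up happens to reproduce the scaling steps listed there.

```php
<?php
// Per-frame step size, rounded up so the target is reached within the
// frame budget (the generator script clamps any overshoot).
// stepPerFrame() is a hypothetical helper, not part of the original project.
function stepPerFrame(int $start, int $end, int $frames): int
{
    return (int) ceil(abs($start - $end) / $frames);
}

$startW = 1126; $startH = 1176;  // initial size used by the script
$finalW = 359;  $finalH = 375;   // final cell size
$frameCounts = [90, 86, 40];     // frames per image for rows 1, 2 and 3

foreach ($frameCounts as $frames) {
    $sx = stepPerFrame($startW, $finalW, $frames);
    $sy = stepPerFrame($startH, $finalH, $frames);
    echo "$frames frames: shrink by {$sx}px wide and {$sy}px tall per frame\n";
}
```

Rounding up rather than down means the image reaches its final size slightly early instead of never quite getting there, which is why the script also clamps the values.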

Once the calculations were done, I exported the data as a JSON file, which included:

- The image filename.

- Start and end positions.

- Resizing parameters for each frame.
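For readers who would rather load such a file than copy the values by hand, here is a minimal sketch using `json_decode`. The JSON field names (`file`, `end_x`, `end_y`, `frames`, `scale_x`, `scale_y`) are assumptions made for illustration; the actual export may have looked different.

```php
<?php
// Hypothetical JSON layout: the field names are assumptions,
// not the actual export from the spreadsheet.
$json = <<<'JSON'
[
  {"file": "2016.png", "end_x": 0,   "end_y": 0, "frames": 90, "scale_x": 9, "scale_y": 9},
  {"file": "2017.png", "end_x": 360, "end_y": 0, "frames": 90, "scale_x": 9, "scale_y": 9}
]
JSON;

// Decode to associative arrays; throw instead of silently returning null.
$rows = json_decode($json, true, 512, JSON_THROW_ON_ERROR);

// Split the rows into parallel, index-aligned arrays like the ones
// the generator script works with.
$lst  = array_column($rows, 'file');
$posx = array_column($rows, 'end_x');
$posy = array_column($rows, 'end_y');
$fc   = array_column($rows, 'frames');

print_r($lst);
print_r($posx);
```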

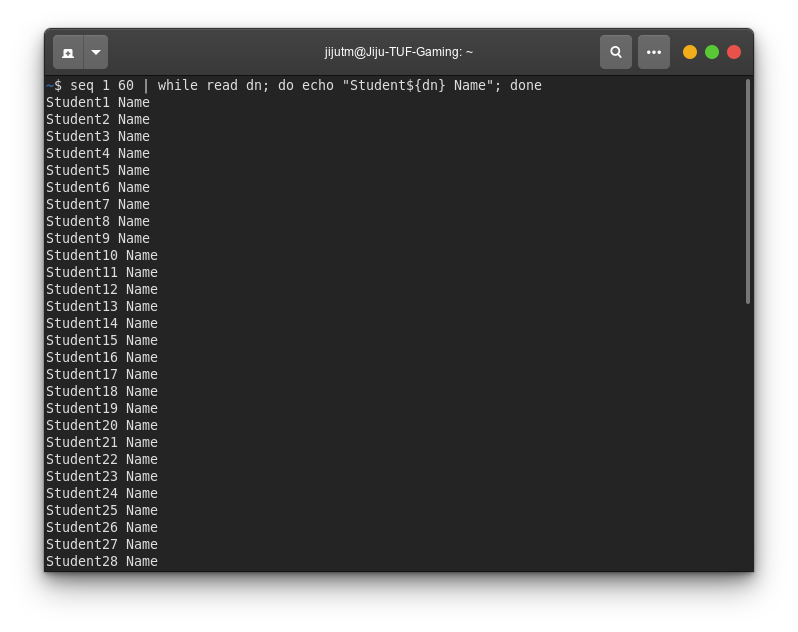

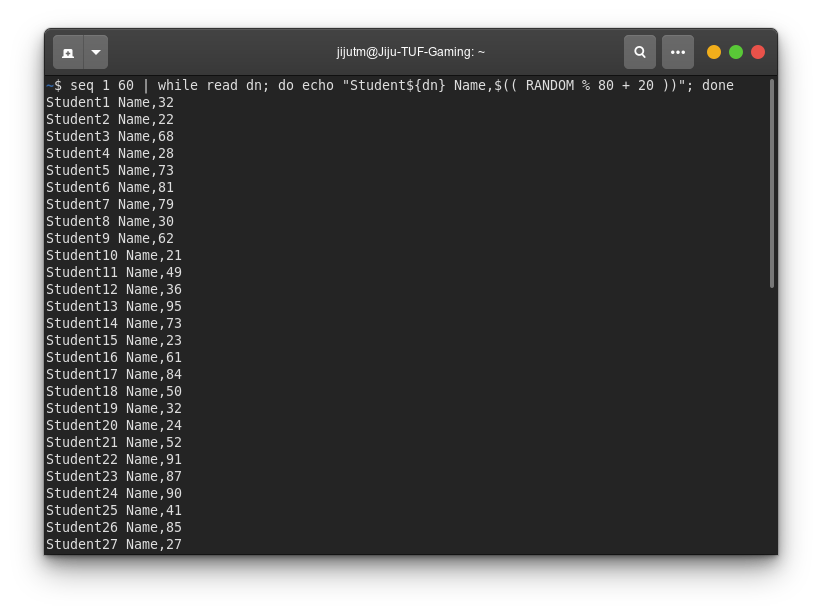

Automating the Frame Creation with PHP

Now came the fun part: using PHP to automate the image manipulation and generate the necessary shell commands for ImageMagick. The idea was to create each frame of the animation programmatically.

I wrote a PHP script that:

- Takes the positioning and resizing data from the JSON file as PHP arrays. I converted the data by hand and hard-coded the arrays into the generator script.

- Generates ImageMagick shell commands to:

  - Place each image on a 1080×1920 blank canvas.

  - Resize each image gradually from 1126×1126 to 359×375 over several frames.

  - Move each image from the bottom of the canvas to its final position in the 3×3 grid.
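The resize-and-move logic can be sketched as a small function that returns the size and position of one image at a given frame, clamped so the image never shrinks below its final size or overshoots its cell. `frameGeometry` and the parameter keys are names invented for this sketch. The numbers are those of the centre image (index 4) in the full script at the end of this post, and the output filename is only a placeholder.

```php
<?php
// Size and position of one image at animation frame $z.
// frameGeometry() is an illustrative helper, not part of the original script.
function frameGeometry(int $z, array $p): array
{
    // Shrink towards the final size, but never below it.
    $w = max($p['finalW'], $p['startW'] - $p['scaleX'] * $z);
    $h = max($p['finalH'], $p['startH'] - $p['scaleY'] * $z);
    // Move right towards the target column, but never past it.
    $x = min($p['endX'], $p['startX'] + $p['moveX'] * $z);
    // Move up or down towards the target row, depending on where it lies.
    $y = ($p['endY'] > $p['startY'])
        ? min($p['endY'], $p['startY'] + $p['moveY'] * $z)
        : max($p['endY'], $p['startY'] - $p['moveY'] * $z);
    return [$w, $h, $x, $y];
}

// Values for the centre image, copied from the script's arrays.
$centre = [
    'startW' => 1126, 'startH' => 1176, 'finalW' => 359, 'finalH' => 375,
    'startX' => 0,    'startY' => 744,  'endX'   => 360, 'endY'   => 376,
    'scaleX' => 9,    'scaleY' => 10,   'moveX'  => 5,   'moveY'  => 9,
];

[$w, $h, $x, $y] = frameGeometry(1, $centre);
// Emit the two ImageMagick commands for this frame (placeholder output name).
echo "convert 2020.png -resize {$w}x{$h}\\! /dev/shm/resized.png\n";
echo "composite -geometry +{$x}+{$y} /dev/shm/resized.png /dev/shm/mystage.png frames/img_0001.png\n";
```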

The full PHP script that generates the shell commands for each frame is included at the end of this post.

This script dynamically generates ImageMagick commands for each image in each frame. The resizing and movement of each image happens frame-by-frame, giving the animation its smooth, fluid transitions.
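Because the frames are later read back as a numbered sequence, every generated file needs a zero-padded serial number in its name. The script builds that name with `str_pad`; an equivalent alternative, shown here only as a sketch, is `sprintf` with a `%04d` pattern. `frameName` is a hypothetical helper name.

```php
<?php
// Build the zero-padded frame filename for a given serial number.
// frameName() is a hypothetical helper; the original script inlines str_pad().
function frameName(int $serial): string
{
    return sprintf('frames/img_%04d.png', $serial);
}

foreach ([0, 7, 648] as $n) {
    echo frameName($n), "\n";
}
```

The `%04d` pattern has the same shape as the one in the FFmpeg input path, which makes it easy to keep the two in step.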

Creating the Final Video with FFmpeg

Once the frames were ready, I used FFmpeg to compile them into a video. Here’s the command I referred, for the exact project the filnenames and paths were different.

```sh
ffmpeg -framerate 30 -i frames/img_%04d.png -i audio.mp3 -c:v libx264 -pix_fmt yuv420p -c:a aac final_video.mp4
```

This command:

- Takes the image sequence (frames/img_0001.png, frames/img_0002.png, etc.) and combines them into a video.

- Syncs the video with a custom audio track created in Hydrogen Drum Machine.

- Exports the final result as final_video.mp4, ready for Facebook or any other platform.
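To get a feel for how long the resulting clip runs, the frame count can be divided by the frame rate. The sketch below assumes the frame counts from the `$fc` array in the full script and the 30 fps used in the command above. It also assumes my reading of the script's numbering is right: one opening frame, then one new file per animation step.

```php
<?php
// Estimate the clip length from the number of frames and the frame rate.
$fc = [90, 90, 90, 86, 86, 86, 40, 40, 40]; // animation steps per image
$framerate = 30;                            // matches -framerate 30

// Assumption: the sequence holds one opening frame plus one file per step.
$totalFrames = 1 + array_sum($fc);
$seconds = $totalFrames / $framerate;

printf("%d frames at %d fps is about %.1f seconds\n", $totalFrames, $framerate, $seconds);
```

Knowing the duration up front helps when composing an audio track of matching length.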

The Final Touch: The 3×3 Matrix Layout

The final frame of the video is particularly special. All nine images are arranged into a 3×3 grid, where each image gradually transitions from the bottom of the screen to its position in the matrix. Over the course of a few seconds, each image is resized from its initial large size to 359×375 pixels and placed in its final position in the grid.

This final effect gives the video a sense of closure and unity, pulling all the images together in one cohesive shot.
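The grid positions themselves follow a simple pattern: the column is the index modulo 3 and the row is the index divided by 3. Here is a minimal sketch that reproduces the position arrays used in the script. The 1-pixel gap between cells is an inference from the 360 and 376 pixel offsets, not something stated in the original data.

```php
<?php
// Top-left corner of each cell in a 3x3 grid of 359x375 images.
$cellW = 359;
$cellH = 375;
$gap   = 1;   // assumed spacing, inferred from the 360/376 offsets

$posx = [];
$posy = [];
for ($i = 0; $i < 9; $i++) {
    $posx[] = ($i % 3) * ($cellW + $gap);        // column 0, 1 or 2
    $posy[] = intdiv($i, 3) * ($cellH + $gap);   // row 0, 1 or 2
}

echo implode(',', $posx), "\n";
echo implode(',', $posy), "\n";
```

Three columns of 360 pixels add up to exactly 1080, the width of the canvas.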

Conclusion

This project was a fun and fulfilling exercise in blending creative design with technical scripting. Using PHP, GIMP, ImageMagick, and FFmpeg, I was able to automate the creation of an animated video that showcases a timeline of my life through images. The transition from individual pictures to a 3×3 grid adds a dynamic visual effect, and the custom audio track gives the video a personalized touch.

If you’re looking to create something similar, or just want to learn how to automate image processing and video creation, this project is a great starting point. I hope this blog post inspires you to explore the creative possibilities of PHP and multimedia tools!

The PHP Script for Image Creation

Here’s the PHP script I used to automate the creation of the frames for the animation. Feel free to adapt and use it for your own projects:

```php
<?php

// list of image files, one for each year
$lst = ['2016.png','2017.png','2018.png','2019.png','2020.png','2021.png','2022.png','2023.png','2024.png'];

$wx = 1126; // initial width
$hx = 1176; // initial height
$wf = 359;  // final width
$hf = 375;  // final height

// final position of each year's image,
// mapped to the array index
$posx = [0,360,720,0,360,720,0,360,720];
$posy = [0,0,0,376,376,376,752,752,752];

// initial placement location, x and y
$putx = 0;
$puty = 744;

// number of transition frames for each file,
// mapped to the array index
$fc = [90,90,90,86,86,86,40,40,40];

// x and y movement per frame for each image,
// mapped to the array index
$fxm = [0,4,8,0,5,9,0,9,18];
$fym = [9,9,9,9,9,9,19,19,19];

// x and y scaling step per frame for each image,
// mapped to the array index
$fxsc = [9,9,9,9,9,9,20,20,20];
$fysc = [9,9,9,10,10,10,21,21,21];

// initialize the sequential file numbering
$serial = 0;

// start by copying the original blank frame to the ramdisk
echo "cp frame.png /dev/shm/mystage.png", "\n";

// loop through the year image list
foreach ($lst as $i => $fn) {
    // echo the filename so that we can follow the progress
    echo "echo '$fn':\n";

    // filename padded with zeros to a fixed width
    $newfile = 'frames/img_' . str_pad($serial, 4, '0', STR_PAD_LEFT) . '.png';

    // create the first frame of a year
    echo "composite -geometry +" . $putx . "+" . $puty . " $fn /dev/shm/mystage.png $newfile", "\n";

    // total distance to travel (not used below)
    $tmx = $posx[$i] - $putx;
    $tmy = $puty - $posy[$i];

    // frame animation
    $maxframe = ($fc[$i] + 1);
    for ($z = 1; $z < $maxframe; $z++) {
        // estimate the new size, never smaller than the final size
        $nw = $wx - ($fxsc[$i] * $z);
        $nh = $hx - ($fysc[$i] * $z);
        $nw = ($wf > $nw) ? $wf : $nw;
        $nh = ($hf > $nh) ? $hf : $nh;

        // resize to the exact size (the "!" ignores the aspect ratio)
        $tmpfile = '/dev/shm/resized.png';
        echo "convert $fn -resize " . $nw . 'x' . $nh . '\! ' . $tmpfile . "\n";

        // move right, stopping at the final x position
        $nx = $putx + ($fxm[$i] * $z);
        $nx = ($nx > $posx[$i]) ? $posx[$i] : $nx;

        // move down or up, stopping at the final y position
        if ($posy[$i] > $puty) {
            $ny = $puty + ($fym[$i] * $z);
            $ny = ($ny > $posy[$i]) ? $posy[$i] : $ny;
        } else {
            $ny = $puty - ($fym[$i] * $z);
            $ny = ($posy[$i] > $ny) ? $posy[$i] : $ny;
        }

        $serial += 1;
        $newfile = 'frames/img_' . str_pad($serial, 4, '0', STR_PAD_LEFT) . '.png';
        echo 'composite -geometry +' . $nx . '+' . $ny . " $tmpfile /dev/shm/mystage.png $newfile", "\n";
    }

    // use the last frame as the stage for the next image,
    // thus building up the final 3 x 3 matrix
    echo "cp $newfile /dev/shm/mystage.png", "\n";
}
```