In 2016 a friend approached me with a requirement. They were receiving high-resolution video files on an FTP server maintained by the media supplier. They had built an in-house, locally hosted solution that let news operators preview the video files and decide where to attach them. As they began spreading their news operations desk across multiple cities, they wanted to migrate the solution to the cloud. They did so promptly with a lift and shift: the whole solution was reconfigured on a large EC2 instance, with custom scripts that automatically checked the FTP location and copied any new media to local folders. By the time I was approached, streaming from the EC2 instance had become sluggish because the media files were being accessed from different cities at the same time. In addition, the full high-definition video had to be downloaded just to preview it. They wanted to optimize bandwidth utilization and improve the operators' response times.

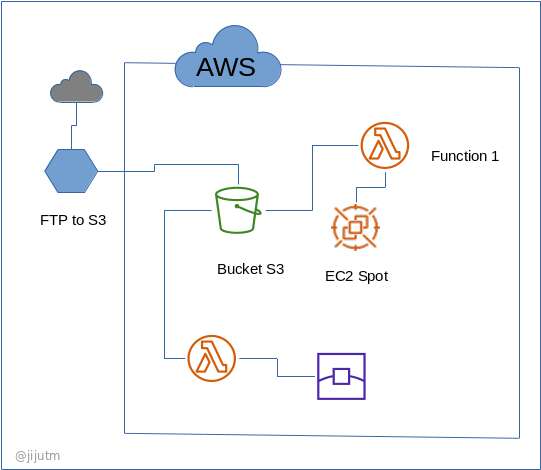

After a lot of brainstorming, we settled on subsampling with FFmpeg to reduce the resolution to 120p for the preview, and providing a link to the HD video that could be embedded into their new CMS. An FTP poller running on an on-premises Linux server would check for new files. As soon as a file was stable, i.e. its file size had not changed between successive polls, it would be copied to an AWS S3 bucket. This was complemented by an AWS serverless solution: a Lambda function written in Node.js that used a statically compiled FFmpeg build I found published by some maintainers on the Internet. The S3 file-created event triggered the Lambda, and once subsampling was done, the thumb file was copied back to S3.

After a lot of trial and error we could handle some files, but the Lambda was failing when file sizes exceeded 420 MB. Lambda /tmp was only 512 MB, so I had to work around the limit: the Lambda checked the file size and, if it exceeded 400 MB, launched an EC2 spot instance with the file name passed in the user-data parameter. The EC2 instance was launched from a golden AMI that had the full FFmpeg installed. We also had to implement some kind of authentication, which was done through CloudFront and a Lambda using basic authentication. The first phase cut costs by about 80%, and switching to S3 VPC endpoints brought the reduction to 86%, both compared with the original large EC2 solution.
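
The stable-file check and the 120p subsampling step described above can be sketched roughly as follows. This is a minimal illustration in Python and not the original scripts: the function names `stable_files` and `preview_command` are hypothetical, the poller is assumed to produce a name-to-size mapping on each poll, and `scale=-2:120` is just one way to ask FFmpeg for a 120-pixel-high output while keeping the aspect ratio.

```python
from typing import Dict, List


def stable_files(previous: Dict[str, int], current: Dict[str, int]) -> List[str]:
    """Return files whose size is non-zero and unchanged between two polls.

    `previous` and `current` map file names to sizes in bytes, as an FTP
    listing might report them (assumed input shape, not the original code).
    """
    return sorted(
        name
        for name, size in current.items()
        if size > 0 and previous.get(name) == size
    )


def preview_command(src: str, dst: str, height: int = 120) -> List[str]:
    """Build an FFmpeg argument list that downscales `src` to `height` pixels.

    scale=-2:<height> keeps the aspect ratio and rounds the width to an
    even number, which most encoders require.
    """
    return ["ffmpeg", "-y", "-i", src, "-vf", f"scale=-2:{height}", dst]


if __name__ == "__main__":
    first_poll = {"a.mp4": 100, "b.mp4": 50}
    second_poll = {"a.mp4": 100, "b.mp4": 80, "c.mp4": 10}
    # Only a.mp4 kept the same size, so only it is considered stable.
    for name in stable_files(first_poll, second_poll):
        print(" ".join(preview_command(name, f"thumb-{name}")))
```

A file that is still being uploaded grows between polls and is skipped until two consecutive polls agree, which is the behaviour the poller relied on.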

The architecture is as follows.

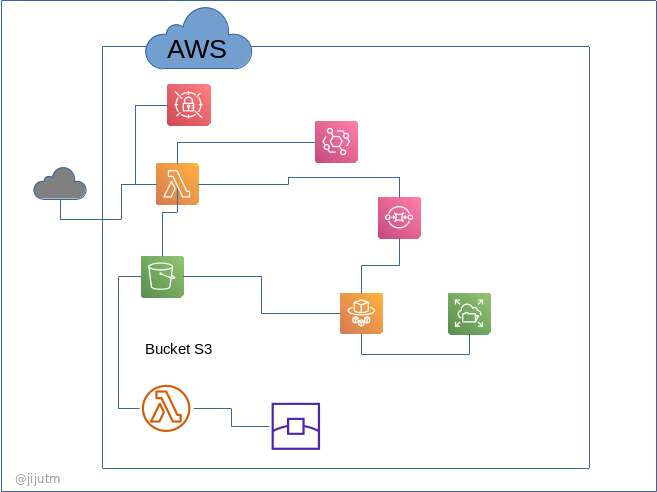

After all these years, an associate asked me how I would approach the same problem with my current exposure and experience: would I change the architecture, and why should those changes be considered? I have been thinking about it ever since, and an outline for a better solution follows.

The FTP poller would move into the AWS environment as a Lambda function written in Python. It would poll the FTP server, copy media files to S3, subsample files of 400 MB or less itself, and push those above 400 MB onto an SQS queue. With the poller inside an AWS VPC, writes to S3 also benefit from the VPC endpoint. The Lambda is triggered by Amazon EventBridge every minute. Messages on the SQS queue would be consumed by AWS Fargate, which uses Amazon EFS as a very large scratch disk, while state data and the final media files are saved on S3. AWS Secrets Manager stores the FTP credentials. Operators could stay on basic authentication. This new solution should be an improvement, and a further cost saving of 3-4% is expected because EKS Fargate replaces the spot instances, along with other factors.
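
The size-based routing in this outline could look something like the sketch below. It is only an illustration under stated assumptions: `route_media`, `SIZE_LIMIT_BYTES`, and the message fields are hypothetical names, 400 MB is interpreted as 400 × 1024 × 1024 bytes, and the two callables stand in for the in-Lambda FFmpeg step and the SQS send (which in a real Lambda would go through an AWS SDK client).

```python
import json
from typing import Callable

# Assumption: "400 MB" is treated as 400 * 1024 * 1024 bytes.
SIZE_LIMIT_BYTES = 400 * 1024 * 1024


def route_media(
    key: str,
    size_bytes: int,
    subsample_inline: Callable[[str], None],
    enqueue: Callable[[str], None],
) -> str:
    """Subsample small files in place; queue large ones for Fargate.

    `subsample_inline` and `enqueue` are injected so the routing rule can
    be exercised without AWS access (hypothetical helpers).
    """
    if size_bytes <= SIZE_LIMIT_BYTES:
        subsample_inline(key)
        return "inline"
    # The message body carries what a worker would need to find the file.
    enqueue(json.dumps({"key": key, "size_bytes": size_bytes}))
    return "queued"


if __name__ == "__main__":
    handled, queued = [], []
    print(route_media("small.mp4", 10 * 1024 * 1024, handled.append, queued.append))
    print(route_media("large.mp4", 900 * 1024 * 1024, handled.append, queued.append))
    print(handled, queued)
```

Keeping the threshold in one constant makes it easy to revisit if the Lambda's scratch space or the queue consumer's capacity changes.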

Conclusion

Some supporting figures are missing because this project is a bit old and I no longer have access to the billing history. With some minor tweaks here and there, creative enhancements could complement the design and increase security and accountability.